Searching for the Root of Short-Term Memory

Before every pitch in a baseball game, the pitcher awaits a signal from the catcher, visualizes the chosen pitch, and lets loose the ball. How does the pitcher’s brain hold on to the catcher’s signal during the seconds that elapse between signal and action? This basic aspect of short-term memory is still something of a mystery to neuroscientists. Individual neurons probably aren’t responsible — they represent information on very short timescales, firing an action potential and returning to their baseline state in milliseconds. The answer likely lies in the architecture of neural circuits, cells grouped together in such a way that they can hold on to information over longer durations. But despite decades-old theories describing how this might work, the precise mechanisms are still hotly debated.

Karel Svoboda, a neuroscientist at the Howard Hughes Medical Institute’s Janelia Research Campus in Ashburn, Virginia, has spent the last 10 years tackling this question. His lab studies motor planning — what happens in the brain in the time that passes between receiving information from the outside world and acting on that information. Neural activity during this time period, known as preparatory activity, can be thought of as a form of short-term memory, representing relevant information until the animal makes a decision.

Svoboda and his collaborators study this process in mice as they perform a delayed-response task, where the animal learns to move left or right — after a pause — in response to a sound. By combining different technologies, including high-density neural recording and optogenetics, Svoboda’s group has been able to narrow down the potential mechanisms responsible for preparatory activity.

Svoboda, an investigator with the Simons Collaboration on the Global Brain, described his work in a special lecture, Neural Mechanisms of Short-Term Memory and Motor Planning, at the Society for Neuroscience meeting in Chicago in October. He talked with the SCGB about his approach, some key experiments, and what he thinks the field’s next steps should be. An edited version of the conversation follows.

What is preparatory motor activity? Why is it important?

Preparatory activity is effectively the neural correlate of motor planning. The basic observation, first described in the 1970s, is that neurons in the parietal and motor cortex are active long before the onset of movement. This activity can predict specific kinds of movements — one can read out the movement that an animal will make, often seconds before the movement is executed. It was one of the first cognitive phenomena that had neural correlates.

In lab situations, this kind of preparatory activity can be thought of as short-term memory — it links an instruction in the past to a movement in the future. I have always been fascinated by information storage at a timescale of seconds. How are certain patterns of neural activity internally generated and internally maintained? This is of course a key question for the SCGB. Neural circuits can hold a state variable in mind so that the animal can make more adaptive actions. How do circuits do this? What mechanisms might maintain this kind of preparatory activity in the brain?

How do you tackle these questions?

Most of the early research on this was done with primates. We developed a paradigm to study it in mice. This lets us bring more incisive biophysical tools to bear on the problem, including whole-cell recordings, an approach rarely used in systems neuroscience that allows you to measure and manipulate the membrane potential of neurons. We also use optogenetics, large-scale extracellular recordings, behavioral manipulation, and so forth.

Theorists have thought a lot about how preparatory activity might be maintained in neural circuits, and there are quite a few models out there. We made a decision tree of the different kinds of models and asked how to distinguish between them. We worked our way through the decision tree, designing experiments to falsify different kinds of models, and have greatly narrowed down the list of plausible models.

You have focused your efforts on a part of the brain called the anterior lateral motor cortex (ALM). How did you settle on this region?

We took an unbiased approach, which is very important and difficult to do in most model systems; it’s doable in mice, zebrafish and flies, and that’s about it. We inactivated different brain regions during the memory epoch of the behavioral task (the time between the tone and the subsequent movement) and looked at which regions had a big impact on the outcome. The ALM popped out as by far the most prominent player. Imaging and electrophysiology experiments showed that there is preparatory activity in the ALM that predicts future movement. In fact, preparatory activity was more pronounced in the ALM than in other areas.

It’s worth mentioning that in these kinds of tasks, you can detect behavior-related activity in many brain regions, probably all brain regions. Everything is connected to everything else by five to six synapses at most. So there are reflections everywhere. But not all of this activity is used for behavior. Inactivation experiments help identify the most important brain regions, and we should probably do these kinds of experiments more often.

What does preparatory motor activity look like in the ALM?

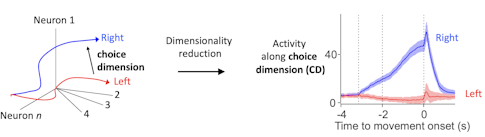

Individual neurons show different types of activity patterns. Some show preparatory activity early, some late. Some ramp up, some ramp down. Most neurons are selective for specific movements. But these individual neurons are part of a larger population. We wanted to look at which features of population activity are critical for behavior. Does the population encode the animal’s choice, such as its decision to move left or right? Or does it encode more specific aspects of movement? That requires a more refined way to look at the coupling between neural activity and behavior.

We put a lot of effort into recording large populations of neurons, generating a potentially high-dimensional representation of population activity in which each dimension corresponds to the activity of one neuron. That allows you to correlate the high-dimensional pattern with behavior on individual trials.

How do you know what these activity patterns mean? How do you use them to predict the animal’s subsequent movement?

When you look at activity in high-dimensional space, you try to find the relevant features of activity that correlate with behavior. In our task, we identify the choice dimension, a direction in activity space that contains most of the dynamics relevant to future behavior. We calculate it with a simple linear algebra trick. This choice dimension then allows us to visualize what’s going on.

Activity along the choice dimension is directly related to movement direction and reaction time. On each trial, the trajectory of neural activity is slightly different. The location of population activity along the choice dimension determines if the animal is more likely to move in one direction versus the other and also how fast the movement will be.
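The interview does not spell out the "simple linear algebra trick." As a rough illustration only, one common way to define such a direction is to take the difference between the trial-averaged population activity for the two movement directions, normalize it, and project each trial onto it. The sketch below assumes that definition and uses made-up firing rates for 30 hypothetical neurons; the function names and numbers are illustrative and are not taken from the lab's analysis.

```python
import random

def mean_vector(trials):
    """Average a list of equal-length activity vectors, neuron by neuron."""
    n = len(trials)
    return [sum(column) / n for column in zip(*trials)]

def choice_dimension(left_trials, right_trials):
    """Unit vector pointing from mean 'left' activity to mean 'right' activity.

    Assumption: the axis is the normalized difference of the two trial-averaged
    population vectors. The source does not state the actual method.
    """
    left_mean = mean_vector(left_trials)
    right_mean = mean_vector(right_trials)
    diff = [r - l for l, r in zip(left_mean, right_mean)]
    norm = sum(d * d for d in diff) ** 0.5
    return [d / norm for d in diff]

def project(trial, axis):
    """Scalar position of one trial's population activity along the axis."""
    return sum(t * a for t, a in zip(trial, axis))

def simulate_trials(mean_rates, n_trials, rng, noise=1.0):
    """Hypothetical data: each neuron's delay-period rate plus Gaussian noise."""
    return [[rng.gauss(m, noise) for m in mean_rates] for _ in range(n_trials)]

rng = random.Random(0)
n_neurons = 30
# Made-up tuning: the first half of the neurons prefer "left", the rest "right".
left_rates = [8.0 if i < n_neurons // 2 else 4.0 for i in range(n_neurons)]
right_rates = [4.0 if i < n_neurons // 2 else 8.0 for i in range(n_neurons)]

# Estimate the axis from one set of trials.
left_train = simulate_trials(left_rates, 50, rng)
right_train = simulate_trials(right_rates, 50, rng)
axis = choice_dimension(left_train, right_train)

# Decision boundary: midpoint between the two projected class means.
midpoint = 0.5 * (project(mean_vector(left_train), axis)
                  + project(mean_vector(right_train), axis))

def predict(trial):
    """Read out the upcoming movement from a single trial's projection."""
    return "right" if project(trial, axis) > midpoint else "left"

# Evaluate on held-out trials.
left_test = simulate_trials(left_rates, 50, rng)
right_test = simulate_trials(right_rates, 50, rng)
n_correct = (sum(predict(t) == "left" for t in left_test)
             + sum(predict(t) == "right" for t in right_test))
accuracy = n_correct / (len(left_test) + len(right_test))
print(f"held-out decoding accuracy: {accuracy:.2f}")
```

Each trial collapses to a single number, its position along the axis, which is what makes it possible to relate population activity to behavior trial by trial. Real recordings are far noisier than this toy data, and the axis would typically be estimated from one set of trials and tested on another, as done here.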

Since there is an excellent correlation between behavioral variables and this simple representation of neural activity, we are likely looking at something relevant to the animal. Extracting the relevant features of neural activity is a big topic in the field now and is related to efforts to find the relevant manifold in high-dimensional spaces that the brain cares about, something several SCGB PIs are working on. [For some examples, see “Uncovering Hidden Dimensions in Brain Signals.”]

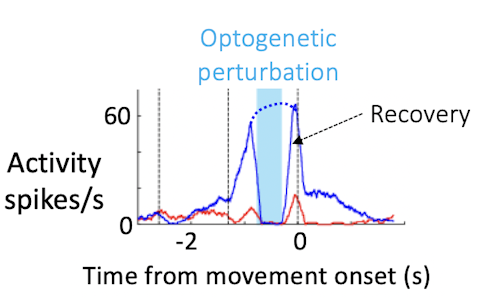

You use optogenetics to modify neural activity within the population, thereby altering neural trajectories. Why is this useful?

We know that the ALM is an important player in preparatory activity. But do activity trajectories during the delay actually instruct aspects of movement later on? Combining perturbation and high-density recording with behavior allows us to address this question more directly. We can use optogenetics to perturb neural activity and measure changes in activity trajectories and related changes in behavior for each trial.

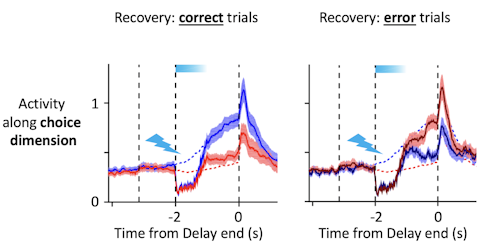

When we do crude perturbations, manipulating hundreds of neurons, trajectories still recover in recognizable ways. Rather than moving to a fundamentally new trajectory, the neurons either return to the path they were on before the perturbation or jump to the trajectory of the other trial type, which corresponds to the incorrect choice. This is probably due to constraints in the circuit. How the trajectories recover links neatly to behavior — the structure of the trajectories determines the direction of the movement, the speed of the movement, and success or failure at the level of individual trials.

These experiments convinced us that neural dynamics during the delay epoch really instruct what the animal will do seconds later. Population activity shapes not only the animal’s choice, but also detailed aspects of behavior, such as the timing, direction and vigor of movement. That’s strong evidence that the ALM population is a key player in movement preparation.

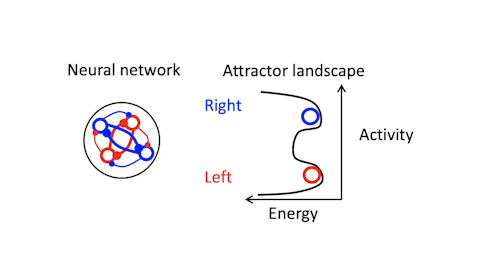

How have you used perturbations to test different models of sustained activity/short-term memory?

The models we talk about are conceptual: they are mathematical models that effectively encapsulate the principles of neural dynamics that underlie maintenance of short-term memory. One example is a synfire chain model for short-term memory, where one set of neurons activates another, which activates another. There are also various attractor-based models, where recurrent connections between neurons maintain activity. In attractor networks, dynamics settle into a stable pattern, converging on a fixed point, for example. In neural systems, this means neural activity moves toward a certain part of population space.

To convince ourselves that single neurons themselves do not maintain memory, we first did experiments using whole-cell recording. We also did an experiment suggesting that sequential activity of neurons is not a plausible model. That brought us to attractor models, many of which go back decades. [For more on this, see “How the Brain Holds On to Working Memory.”]

Trajectories recover to one of two clusters after being perturbed, suggesting that there is one discrete attractor for each movement direction. These attractors exist at the same time, and the network can switch from one attractor to another. Using appropriately calibrated perturbations, we found that switching attractors also switches movement directions. Pushing the trajectory from one attractor toward another changes the animal’s choice.
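The idea of two coexisting discrete attractors can be illustrated with a toy one-dimensional system. The sketch below is not the lab's model. It assumes a textbook bistable equation, dx/dt = x - x^3 + drive, where x stands in for position along the choice dimension, the stable fixed points at -1 and +1 play the role of the "left" and "right" attractors, and a brief pulse of drive stands in for an optogenetic perturbation. All parameter values are arbitrary.

```python
def simulate(x0, pulse_amplitude, pulse_start=2.0, pulse_duration=2.0,
             t_end=20.0, dt=0.01):
    """Euler-integrate dx/dt = x - x**3 + drive(t) and return the trajectory.

    Assumption: a one-dimensional double-well system stands in for the
    population dynamics. x = -1 and x = +1 are stable fixed points and
    x = 0 is the unstable boundary between their basins.
    """
    x = x0
    trajectory = [x]
    n_steps = int(round(t_end / dt))
    for step in range(n_steps):
        t = step * dt
        in_pulse = pulse_start <= t < pulse_start + pulse_duration
        drive = pulse_amplitude if in_pulse else 0.0
        x += dt * (x - x ** 3 + drive)
        trajectory.append(x)
    return trajectory

def choice(x_final):
    """Read out the movement direction from the sign of the final state."""
    return "right" if x_final > 0 else "left"

# Start every run in the "left" attractor and vary the perturbation strength.
unperturbed = simulate(x0=-1.0, pulse_amplitude=0.0)
weak = simulate(x0=-1.0, pulse_amplitude=0.2)
strong = simulate(x0=-1.0, pulse_amplitude=1.5)

for label, traj in [("none", unperturbed), ("weak", weak), ("strong", strong)]:
    print(f"perturbation={label:6s} final x={traj[-1]:+.3f} -> {choice(traj[-1])}")
```

In this sketch the weak pulse displaces the state but it relaxes back to the attractor it started in, while the strong pulse pushes it across the boundary at x = 0 and it settles into the other attractor, flipping the readout. The state never ends up anywhere else, which is the qualitative signature of discrete attractors described above.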

The design of the perturbation is key. My collaborator, Hidehiko Inagaki [now at the Max Planck Florida Institute for Neuroscience], found conditions under which the trajectories transiently overlap after perturbation but then recover to one unperturbed trajectory or the other with high probability.

Does that mean you can convince a mouse that wanted to move left to move right?

Yes. After the perturbation, the network sits at a knife’s edge for a few milliseconds, then snaps into the left or right attractor. Depending on what the animal intended to do before the perturbation, this will result in a correct or incorrect choice.

You said that in the 1990s, it was difficult to see a path where you could distinguish different network models. What has changed?

The key is that we can now record from many neurons at once, which allows us to calculate these trajectories. We think that in this kind of simple behavior, 30 neurons are probably all you need.

Equally important are the manipulation experiments. Optogenetics allows us to perturb activity with relative precision. On an individual trial, we can watch what happens to the trajectory after perturbation and interpret those dynamics in the context of behavior.

Dimensionality-reduction methods are also essential. The math itself is straightforward, but some methods are computationally intensive. We can now easily do these analyses on a laptop, but that wasn’t the case 20 to 30 years ago.

What issues will be most important to tackle next?

Ultimately, we have to figure out how computations are implemented at the level of circuits made with actual neurons. I think the next 10 to 20 years will be defined by how to link neural dynamics and computation to defined neural networks. I think very little is happening so far in terms of integrating our exploding knowledge of anatomy into models. Why does the brain have so many distinct cell types? What are their roles? Are these even meaningful questions to ask? How do we link structural biology to dynamics, computation and theory? I think this is something that needs to happen. My colleagues at Janelia and SCGB investigator Larry Abbott are moving in this direction in the fruit fly. I think a lot will happen in the fly that will show us how to use anatomy to learn about neural computation.

We also need new modeling approaches and a new generation of theorists to grapple with this complexity. The current generation of theorists often thinks in physics terms, trying to extract simple principles. But the logic of neural circuits might be a lot messier. Studying the brain may be a reverse engineering problem, rather than a physics-style theory problem.