Verbal Working Memory Found to Depend on Two Neural Networks, With Implications for Artificial Intelligence

Two different networks are responsible for processing information in working memory, according to new research published in Nature Neuroscience in December. The findings could have implications for artificial intelligence (AI), says senior author Bijan Pesaran, a neuroscientist at the Center for Neural Science at New York University (NYU) and an investigator with the Simons Collaboration on the Global Brain.

According to a press release from NYU:

Past studies had emphasized how a single “Central Executive” oversaw manipulations of information stored in working memory. The distinction is an important one, Pesaran observes, because current AI systems that replicate human speech typically assume computations involved in verbal working memory are performed by a single neural network.

“Artificial intelligence is gradually becoming more human-like,” says Pesaran. “By better understanding intelligence in the human brain, we can suggest ways to improve AI systems. Our work indicates that AI systems with multiple working memory networks are needed.”

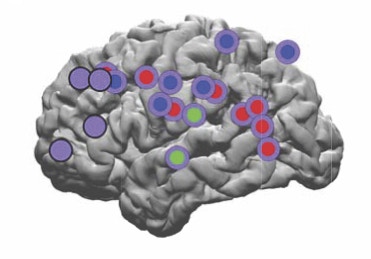

Researchers were able to explore the inner workings of the human brain by studying people being monitored for epilepsy, whose electrical signals are recorded directly from the surface of the brain. Participants performed a speech task in which they either repeated a given sound or made a different sound, following a set of predetermined rules. Pesaran and his collaborators decoded neural activity as participants applied the rules to the sounds held in their working memory. The results showed that the process was governed by two different networks.

The press release explains:

One network encoded the rule that the patients were using to guide the utterances they made (the rule network) … The process of using the rule to transform the sounds into speech was handled by a second, transformation network. Activity in this network could be used to track how the input (what was heard) was being converted into the output (what was spoken) moment-by-moment.
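
For readers who think in code, the division of labor described in the release can be loosely pictured as two separate components: one that holds the rule currently in force, and one that applies it to the sound that was heard. The Python sketch below is only an illustration of that split, not the researchers' model or analysis. The sound labels (`ba`, `da`), the rule names, and every function name are hypothetical placeholders chosen for this example.

```python
from enum import Enum


class Rule(Enum):
    """Hypothetical labels for the two kinds of rule in the speech task."""
    REPEAT = "repeat"   # say the sound that was heard
    SWITCH = "switch"   # say a different sound


# Hypothetical sound pairing; the study's actual stimuli are not given here.
SWITCH_MAP = {"ba": "da", "da": "ba"}


def encode_rule(cue: str) -> Rule:
    """Toy stand-in for the 'rule network': holds which rule applies."""
    return Rule(cue)


def transform(heard: str, rule: Rule) -> str:
    """Toy stand-in for the 'transformation network': maps input to output."""
    if rule is Rule.REPEAT:
        return heard
    return SWITCH_MAP[heard]


def respond(cue: str, heard: str) -> str:
    """Combine the two components: pick the rule, then apply it to the sound."""
    return transform(heard, encode_rule(cue))
```

Calling `respond("repeat", "ba")` returns the heard sound unchanged, while `respond("switch", "ba")` returns the paired sound. Keeping the rule and the transformation in separate functions mirrors, in a very simplified way, the idea that the two jobs are handled by different networks.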

Translating what you hear in one language into speech in another involves applying a similar set of abstract rules. People with impairments of verbal working memory find it difficult to learn new languages. Modern intelligent machines also have trouble learning languages, the researchers add. “This research examines a uniquely human form of intelligence, verbal working memory, and suggests new ways to make machines more intelligent,” Pesaran says.