Biological and Machine Intelligence: Connecting the Dots

When we see a dog, the neurons in our brain fire rapidly to tell us what we’re looking at. While it all happens in less than the blink of an eye, the process itself is incredibly complex. At the Flatiron Institute, SueYeon Chung is working to figure out how our brains efficiently process such information.

In her work, Chung applies a combination of statistical physics, applied math and machine learning. Her goal? To build mathematical frameworks for understanding how neurons collectively encode and transfer information in a split second. Such insights into our neurobiology can also improve artificial intelligence (AI). For example, applying the characteristics of biological neurons to AI programs can lead to more efficient computation.

Chung is an assistant professor in the Center for Neural Science at New York University and an associate research scientist and project leader at the Flatiron Institute’s newly created Center for Computational Neuroscience. Before joining the Flatiron Institute and NYU, Chung worked as a postdoctoral research scientist at Columbia University’s Center for Theoretical Neuroscience. Prior to that, she was a Fellow in Computation in the Department of Brain and Cognitive Sciences at the Massachusetts Institute of Technology. Chung received her doctorate in applied physics from Harvard University and a Bachelor of Science in physics and mathematics from Cornell University.

Chung recently spoke to the Simons Foundation about her work and the inspirations for studying the complexities of the brain. The conversation has been edited for clarity.

What is your current research focus?

My work is at the intersection of neuroscience and machine learning, which is a branch of AI. In my lab, we develop concrete measures of how patterns of neural activity efficiently encode and package the information needed to perform a task. I can give a quick analogy.

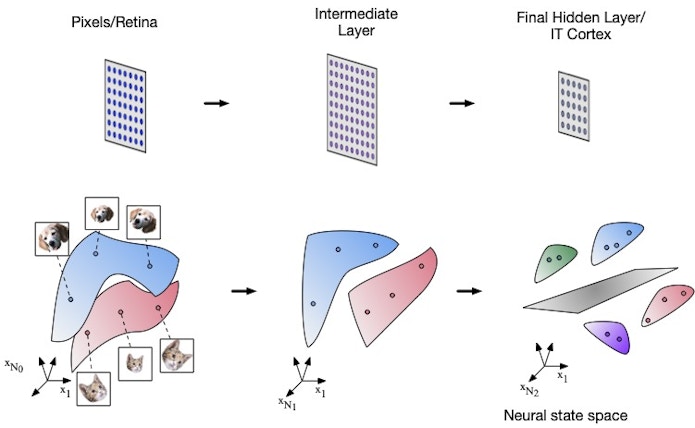

Imagine you have a finite-sized box. If you try to fit large balls inside, you can only put a small number in the box. But if the balls are smaller, many more can be squeezed in. A similar principle exists with neural data. When the brain is presented with a moving stimulus, such as a video of a dog, a set of neurons responds with different firing rates. This collection of responses can be represented as a mathematical entity called an ‘object manifold’. In our analogy, an object manifold is one of the balls in our box. Our brains can juggle, or process, many of these manifolds at once. To do this, the brain makes these manifolds small and tightly packed, which makes it efficient at storing and reading out such information. In effect, the brain has squeezed extra balls into the box.

So our work focuses on the connection between the geometry of these manifolds and the efficiency of the neural code: how these object manifolds (the balls) are arranged so that the brain can work efficiently.
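To make the “size” of a ball slightly more concrete, here is a minimal sketch, assuming each object manifold is simulated as a cloud of population responses (one row per presentation of the same object, one column per neuron). It computes two simplified geometric summaries: a radius (the spread of responses around the manifold’s center, relative to the center’s distance from the origin) and a participation-ratio dimension. The function name `manifold_geometry` and the simulated data are hypothetical, chosen for illustration; these are common proxies, not the lab’s actual manifold-capacity measures, which come from a more involved theory.

```python
import numpy as np

def manifold_geometry(responses):
    """Return (radius, dimension) for one simulated object manifold.

    responses: array of shape (n_samples, n_neurons), one row per
    presentation of the same object. Both measures are simplified,
    illustrative proxies (hypothetical helper, not a published API).
    """
    center = responses.mean(axis=0)
    deviations = responses - center
    # Radius: root-mean-square spread around the center, relative to the
    # distance of the center from the origin.
    spread = np.sqrt((deviations ** 2).sum(axis=1).mean())
    radius = spread / np.linalg.norm(center)
    # Participation ratio: (sum of covariance eigenvalues)^2 divided by the
    # sum of squared eigenvalues; large when variability spans many axes.
    eigvals = np.linalg.eigvalsh(np.cov(deviations, rowvar=False))
    dimension = eigvals.sum() ** 2 / (eigvals ** 2).sum()
    return radius, dimension

rng = np.random.default_rng(0)
n_neurons, n_samples = 100, 500
center = rng.normal(size=n_neurons)

# A "large ball": response variability spread across all neurons.
big = center + 0.5 * rng.normal(size=(n_samples, n_neurons))

# A "small ball": weaker variability confined to 3 random directions.
basis = rng.normal(size=(3, n_neurons)) / np.sqrt(n_neurons)
small = center + 0.5 * rng.normal(size=(n_samples, 3)) @ basis

for name, resp in [("large", big), ("small", small)]:
    r, d = manifold_geometry(resp)
    print(f"{name}: radius={r:.2f}, dimension={d:.1f}")
```

In the terms of the analogy, the second cloud is the smaller ball: both its radius and its dimension are lower, so, in principle, more such manifolds could share the same neural space before they start to overlap.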

Because the brain is so complex and so little is known about it, I use machine learning methods to build models of the brain and study the rules that the brain uses to learn. This approach can help make sense of neural data. Additionally, it turns out that this approach is helpful for understanding the inner workings of AI models, mainly deep networks, which are inspired by the brain.

What are deep networks?

Deep network models are layered arrangements of artificial units that mimic how neurons work. These networks are given a cognitive task and learn to complete it through training, much as the brain does. This is different from older systems, in which researchers hand-coded artificial intelligence models to do a specific task. Deep networks are trained, like our brains, to perform certain tasks, and they can adapt to learn new ones.

Deep networks have been around for a long time, but in the mid-2010s they surged in popularity due to their success at tasks such as facial recognition and transcribing speech into text.

How can studying deep networks help our understanding of the brain, and vice versa?

Deep network models have been designed to emulate the brain. But counterintuitively, even though they are a human creation, we don’t really understand why they work as well as they do. Our work shows that you can develop computational analysis tools to understand how neurons, artificial or biological, perform highly complex tasks, like collectively encoding information when processing vision and movement.

Additionally, there are many features of the brain that are not reflected in modern deep network models. For example, biological neurons are stochastic, meaning their firing has a random component. If you introduce features like stochasticity into network models, it turns out that they make these deep network models perform much better.

This is one example where using neurobiological characteristics as the building blocks of these network models helps improve these networks’ computational capacities. And now with our new geometric analysis tools and modeling techniques, we can also investigate how these neurobiological characteristics make neural responses better suited for task performance. It’s very exciting to see these connections arise in my lab’s work.

What are you looking to study in the future?

I’m excited to continue thinking about the efficiency of neurons in performing a task, like classifying or discriminating between different objects. How do these tasks affect neural activity and firing rates? This goes back to the earlier analogy with the balls in a box. What we’re trying to discover — with mathematical theory — is how the brain packs the “memory” balls into a box, so to speak.

These theories can then be applied to both neuroscience and AI, with the goal of making the fundamentals of computation more efficient. Since our theory tells us that smaller, lower-dimensional manifolds are more efficient at packing information, deep network models might also benefit from implementing such geometry. We’ve already designed network models to be more like the brain, for example by adding the stochasticity I mentioned, which helps them more efficiently perform tasks that are commonly solved by the brain.

Thus far our work has focused on sensory areas of the brain, like the visual and auditory areas, but I would love to branch out to the motor cortex and more cognitive areas, like the prefrontal cortex and hippocampus, which are involved in activities such as spatial navigation. This would go beyond object identification to even more complex tasks, which will be crucial to understanding the computational principles underlying cognition.

How has the Flatiron Institute helped you with this work?

My training and research are interdisciplinary. Working at the Flatiron Institute, which is an internal research division of the Simons Foundation, has been very helpful because there’s a network of computational scientists, not only in neuroscience but also in astrophysics, quantum physics, biology and mathematics. It’s very intellectually stimulating, and I’m constantly inspired by the work coming out of each of the institute’s centers.

Another aspect is the Foundation’s support for collaborations with other institutions. For example, I have a joint appointment at NYU’s Center for Neural Science. That has enabled collaborations with experimental neuroscientists there, which are critical to the success of our lab’s work. The Flatiron Institute is the perfect place for collaboration.

This Q&A is part of Flatiron Scientist Spotlight, a series in which the institute’s early-career researchers share their latest work and contributions to their respective fields.