Cracking the Neural Code in Humans

In a sterile operating room at Massachusetts General Hospital, neurosurgeon Ziv Williams stood poised above his patient. Williams was about to implant a deep brain stimulation device, a specialized electrode designed to treat movement disorders, through a small hole drilled in the skull. But first he would perform a much more unusual procedure. The patient had agreed to be part of a study testing a device called Neuropixels, a silicon electrode array capable of tracking brain activity at unprecedented scale and resolution. Researchers would record from the patient’s brain for several minutes before moving on to the DBS procedure.

Neuropixels devices, which can track the electrical activity of thousands of individual neurons simultaneously, have had an enormous impact on the practice of neuroscience since their launch in 2018. But to date, their use has been limited to studies in rodents and monkeys. Williams, Sydney Cash, a neuroscientist and epileptologist at MGH, and collaborators are among the first to use the devices in humans, having published their results in the February issue of Nature Neuroscience. Though the study was mainly intended to demonstrate the feasibility of using Neuropixels in people, early results hint at the technology’s potential. The researchers recorded a broad range of spike waveforms, for example, some of which had not been seen in rodents, says Angelique Paulk, a neuroscientist at Harvard University and lead author on the study. “This opens an exciting new window into human cortical physiology and cognition,” Williams says.

Neuropixels is the latest in an evolving arsenal of tools used to study neural activity in humans, in experiments founded on a deep interplay between clinical and basic research. Given the safety risks of directly accessing the brain, these invasive recordings are performed only in patients already undergoing neurosurgery or in paralyzed people enrolled in clinical trials of brain-computer interfaces (BCIs), such as the BrainGate trials. The primary goal of these studies is to develop better treatments for neurological disorders. “But it’s also an unprecedented opportunity to study the human brain at a resolution we typically don’t have,” says Chethan Pandarinath, a neuroscientist at Emory University in Atlanta. Those insights then feed back into better BCIs. “There’s a crisp tie-in between cutting-edge basic science and the application.”

With recent advances in both recording technology and the tools for analyzing large-scale neural activity, researchers studying the human brain should soon be able to address some fundamental questions concerning neural coding and dynamics. The field has debated, for example, whether the tasks that researchers use with rodents and monkeys are sophisticated enough to capture the full repertoire of activity patterns that large neural populations can produce. The ability to study complex human activities, such as speaking and writing, offers the opportunity to explore this question in ways not possible in animals. “To push the knowledge of how populations of neurons compute, you need a simple behavior that’s rapid and highly dexterous,” says Krishna Shenoy, a neuroscientist at Stanford University and an investigator with the Simons Collaboration on the Global Brain. Shenoy’s team is studying these types of behaviors in patients with long-term neural implants and is collaborating with Cash, Williams, Paulk and others on Neuropixels recordings.

A gateway to the brain

The most common type of human recording experiment piggybacks on neurosurgical procedures, such as those for epilepsy or deep brain stimulation. But the longest-running studies come from efforts to develop assistive devices designed to help paralyzed people by translating neural activity into the user’s desired action.

At the heart of this type of BCI is an algorithm that learns the relationship between input (the neural activity produced when the user thinks about what they want to do) and output (the movement of a robotic arm or a computer cursor, for example). BCIs can employ different technologies for monitoring the brain, but the more precisely they can record and interpret neural activity, the more effectively they can transform those signals into action. The BrainGate study, first launched in 2004, uses the Utah array, a microelectrode array containing about 100 needle-like electrodes. Utah arrays are considered the gold standard for long-term recording: they’ve been in use for more than two decades and have been used to record from a single person for six years. They record neural activity at much higher resolution than other electrode arrays commonly used in humans, which detect local field potentials rather than activity from single cells.
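The decoding algorithm described above can take many forms, and the text does not specify which one BrainGate uses. As a minimal sketch of the general idea, assuming a simple linear decoder, the Python below simulates a hypothetical population of 96 cosine-tuned neurons, fits a ridge-regression map from binned spike counts to 2D cursor velocity, and checks it on held-out data. The tuning model, population size and the names `simulate_session`, `fit_ridge_decoder` and `decode` are illustrative assumptions, not part of any published system.

```python
import numpy as np

def simulate_session(n_neurons=96, n_bins=2000, seed=0):
    """Hypothetical data: neurons whose rates depend linearly on an intended 2D velocity."""
    rng = np.random.default_rng(seed)
    velocity = rng.normal(size=(n_bins, 2))              # intended cursor velocity per time bin
    preferred = rng.normal(size=(2, n_neurons))          # each neuron's preferred direction (assumed)
    baseline = rng.uniform(5, 20, size=n_neurons)        # baseline spike count per bin
    rates = baseline + (velocity @ preferred) * 3.0      # linear (cosine-like) tuning
    counts = rng.poisson(np.clip(rates, 0, None))        # noisy Poisson spike counts
    return counts.astype(float), velocity

def fit_ridge_decoder(counts, velocity, alpha=1.0):
    """Fit W, b so that velocity is approximately counts @ W + b, with an L2 penalty."""
    x_mean = counts.mean(axis=0)
    y_mean = velocity.mean(axis=0)
    X = counts - x_mean
    Y = velocity - y_mean
    W = np.linalg.solve(X.T @ X + alpha * np.eye(X.shape[1]), X.T @ Y)
    b = y_mean - x_mean @ W
    return W, b

def decode(counts, W, b):
    """Map spike counts to predicted cursor velocity."""
    return counts @ W + b

counts, velocity = simulate_session()
train_idx, test_idx = slice(0, 1500), slice(1500, None)
W, b = fit_ridge_decoder(counts[train_idx], velocity[train_idx])
pred = decode(counts[test_idx], W, b)
r_x = np.corrcoef(pred[:, 0], velocity[test_idx][:, 0])[0, 1]
print(f"held-out correlation (x velocity): {r_x:.2f}")
```

A linear map is the simplest possible choice; the handwriting decoder discussed below used a recurrent neural network instead, which can capture how activity unfolds over time.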

Shenoy and collaborator Jaimie Henderson, a neurosurgeon, joined the BrainGate study in 2009, translating decades of research on the neural coding of how monkeys reach and grasp. (For more, see “Discoveries of Rotational Dynamics Add to Puzzle of Neural Computation.”) Their work improved BrainGate users’ ability to efficiently move a computer cursor, enhancing their typing and communication capability.

Much of their work followed monkey studies from Shenoy’s lab. But three years ago, Shenoy says, he felt the research had reached an inflection point. “The time had come to do things that can only be done in humans,” he says, such as studying writing and speech. “We realized that we could responsibly and ethically explore those abilities in clinical trial participants implanted for the ‘simpler’ reach or reach-and-grasp work to learn entirely new science and then start building new BCIs.”

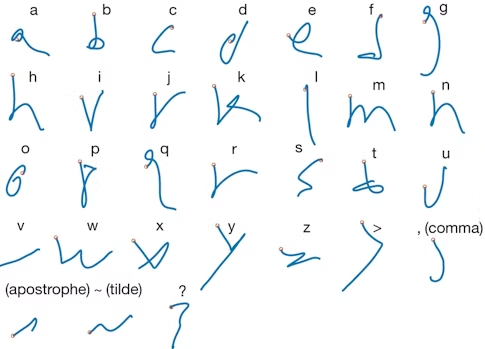

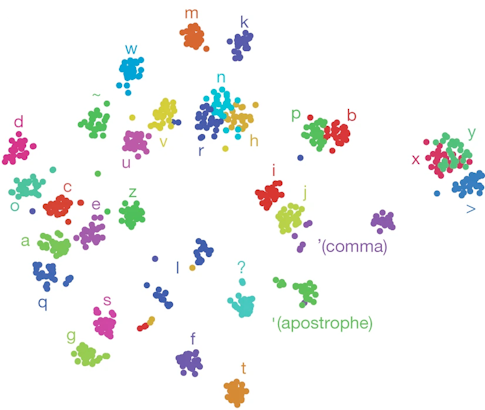

In a study published in Nature last year, Shenoy, Francis Willett and collaborators asked a BrainGate participant to imagine writing different letters while the researchers recorded activity from about 200 neurons. They decoded neural activity using a recurrent neural network, enabling the participant to produce 90 characters per minute, more than doubling the previous record for an intracortical BCI. “There is nothing like seeing the participant type faster than he had been able to communicate,” Shenoy says.

One reason the researchers were able to decode the intended letters so effectively is that the neural activity produced when someone imagines drawing a letter is more complex than the activity produced when the person imagines drawing a straight line. “Your brain is actually issuing more information per second when you handwrite than when you make a straight line,” Shenoy says. That increased complexity made each letter’s neural pattern more distinctive and thus quicker to decode. It also offers new opportunities for exploring neural coding. Pandarinath, an investigator with the Simons-Emory International Consortium on Motor Control, and collaborators are now beginning to examine how neural population dynamics allow us to coordinate fast, fine finger movements, like the ones needed to play the piano, which are fundamentally different from large, simpler movements, such as reaching.

Because a core goal of BCIs is to help people control robotic arms or computers, BrainGate studies often focus on parts of the motor cortex involved in planning hand and arm movements. But it turns out that this brain region harbors a broader range of signals, including those related to speech, offering another opportunity for examining complex neural dynamics. In a study published in eLife in 2019, Shenoy, Sergey Stavisky (now a neuroscientist at the University of California, Davis and an SCGB investigator) and collaborators showed they could reliably decode speech from neural activity in the hand knob area of the motor cortex in people who can’t move their hands but can still speak. “We can observe a lot from this narrow keyhole we are looking at,” Stavisky says. “Even if we implant the array based on how to maximize BCI performance, we will be able to do interesting important basic science on top of it.”

Stavisky, Shenoy and collaborators are now embarking on a new study, funded by the SCGB, studying speech regions more directly. They will implant BrainGate devices in four speech-related parts of the brain, including Broca’s area, a region important for generating words. Researchers will be able to study the neural dynamics underlying speech and how those dynamics shift from region to region. “It will be the first multi-area work in humans at single-cell resolution with the capacity of speech,” Shenoy says. “We are really excited about that.”

New window on neural dynamics

Given its complexity, speech is an ideal substrate for studying neural dynamics. A monkey might learn to reach its arm in 100 different ways, but a person can say 10,000 words over weeks of recordings with no training, Stavisky says, opening a whole new realm of scientific questions. Researchers can explore how the brain encodes this vast space of behavior and examine what neural activity looks like as people prepare to speak. “Speech is the signature human ability,” Shenoy says. “We can speak up to 150 words a minute; it is the most rapid thing we can do.” He predicts that the ability to study the neural activity underlying speech will make it a “big workhorse engine for the whole community,” much as decision-making tasks are today.

The ability to study more complex tasks, such as speech and writing, in humans offers the opportunity to dig into some deep questions that have emerged from animal studies of neural dynamics — most notably, whether the relative simplicity of the behavioral tasks used in animal research has constrained scientists’ ability to explore the brain’s full dynamical repertoire. “It could be that to really understand the system, we need to increase complexity dramatically,” Pandarinath says.

One question that Shenoy, Stavisky, Pandarinath and collaborators want to explore is what types of dynamics emerge during sophisticated tasks. Will the same neural motifs drive a simple reach of the arm and the complex finger movements required to play a concerto? “Or does the system use different machinery for different tasks, with one set of dynamics for grasping and another for fine finger movements?” Pandarinath asks. Researchers are starting to explore this in monkeys, but human studies would push this further, he says.

One way to analyze neural dynamics is to view them as low-dimensional processes embedded within a higher-dimensional space. The activity of a population of 100 neurons can in principle occupy a 100-dimensional space, with one axis per neuron. But because neurons aren’t all independent, their activity patterns are limited to a subspace or manifold within that higher-dimensional space. Somewhat surprisingly, studies to date suggest that neural activity, even in large populations of cells, is confined to a relatively small number of dimensions, roughly 10 to 20. Neuroscientists have debated whether this reflects the true nature of the brain’s activity or the constrained tasks researchers use to probe it. “It could be that concise and low-dimensional descriptions of activity are largely a product of how we study the neural circuit,” Pandarinath says. “These simplified descriptions have been useful for understanding the dynamics of simple movements, but it would be strange to have 10 million neurons to control your arm and only see 20 dimensions of co-activation over and over again when the circuit has the capacity to do much more.”
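To make the idea of a low-dimensional manifold concrete, here is a sketch under stated assumptions: 100 simulated neurons are driven by 10 shared latent signals plus independent noise, and principal component analysis (PCA), one common way among several to estimate dimensionality, counts how many dimensions are needed to capture 90 percent of the variance. The latent count, noise level, 90 percent threshold and function names are hypothetical choices for illustration.

```python
import numpy as np

def simulate_population(n_neurons=100, n_latents=10, n_samples=5000, noise=0.3, seed=1):
    """Hypothetical population: each neuron is a weighted mix of a few shared latents plus private noise."""
    rng = np.random.default_rng(seed)
    latents = rng.normal(size=(n_samples, n_latents))          # shared low-dimensional signals
    mixing = rng.normal(size=(n_latents, n_neurons))           # how strongly each latent drives each neuron
    private = noise * rng.normal(size=(n_samples, n_neurons))  # independent noise per neuron
    return latents @ mixing + private

def explained_variance_ratio(activity):
    """Fraction of total variance captured by each principal component, largest first."""
    centered = activity - activity.mean(axis=0)
    singular_values = np.linalg.svd(centered, compute_uv=False)  # returned in descending order
    variances = singular_values ** 2
    return variances / variances.sum()

def dims_for_variance(activity, threshold=0.9):
    """Smallest number of principal components whose cumulative variance reaches `threshold`."""
    cumulative = np.cumsum(explained_variance_ratio(activity))
    return int(np.searchsorted(cumulative, threshold) + 1)

activity = simulate_population()
ratios = explained_variance_ratio(activity)
print("neurons (nominal dimensions):", activity.shape[1])
print("PCs for 90% of variance:", dims_for_variance(activity))
print("variance in top 10 PCs: %.3f" % ratios[:10].sum())
```

The count depends on the estimation method and the variance threshold chosen, and this toy model builds its answer in by construction; it only shows how a 100-neuron population can occupy far fewer than 100 dimensions.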

Studies of speech and fine motor control could provide new insight into this question. Though they don’t yet have results from their speech studies, Stavisky anticipates that the neural dynamics underlying speech will be higher-dimensional than those associated with simple movement. Producing speech requires many muscles and is much more complex than reaching an arm. “There are things like context and emotional valence associated with speech but not movement that will add another layer of complexity,” he says. Indeed, such studies may reveal entirely new types of dynamics. “My guess is it will,” Shenoy says. “We have never pushed a system so hard.”

Studies like these rely on new analysis methods that make it possible to decode information from single trials, rather than from neural activity averaged across many repetitions of the same behavior, as is often done in monkey studies. “As the behavior becomes more complex, the space of possible movements is huge: thousands of possible words,” Stavisky says. “If we want to understand how the brain generates this rich repertoire, we want single-trial analyses so we can sample more behavioral conditions like different words.” Moreover, because the exact dynamics of the vocal tract differ even when someone says the same word over and over, researchers need to relate neural activity to behavior trial by trial, he says.
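A toy example shows why trial averaging can hide the very variability these speech studies care about. In the hypothetical simulation below, each trial produces the same brief burst of activity, but its timing jitters from trial to trial, standing in for the way vocal-tract dynamics vary across repetitions of a word. The trial average comes out lower and smeared in time, while the timing can still be read off each single trial. The burst shape, jitter size and variable names are assumptions, and real single-trial methods must also contend with spiking noise, which this sketch omits.

```python
import numpy as np

def simulate_jittered_trials(n_trials=200, n_time=200, onset_mean=100.0,
                             jitter_sd=15.0, width=5.0, seed=3):
    """Each trial: a Gaussian-shaped burst whose peak time varies randomly (hypothetical data)."""
    rng = np.random.default_rng(seed)
    t = np.arange(n_time)
    onsets = onset_mean + rng.normal(0.0, jitter_sd, size=n_trials)
    trials = np.exp(-0.5 * ((t[None, :] - onsets[:, None]) / width) ** 2)
    return trials, onsets

trials, onsets = simulate_jittered_trials()

# Trial-averaged response: jitter smears the burst and lowers its peak
average = trials.mean(axis=0)

# Single-trial view: each trial keeps its full peak, and its timing can be estimated directly
single_trial_peak = trials.max(axis=1).mean()
estimated_onsets = trials.argmax(axis=1)
timing_error = np.abs(estimated_onsets - onsets).mean()

print("mean single-trial peak: %.2f" % single_trial_peak)
print("peak of trial average:  %.2f" % average.max())
print("mean single-trial timing error (samples): %.2f" % timing_error)
```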

New technologies such as Neuropixels, which boost recording capacity from hundreds to potentially thousands of neurons, will help in that regard. Recording from a greater number of neurons enables researchers to examine dynamics at finer and finer timescales, Shenoy says. “Neurons are noisy; the more you have at the same time, the better you can estimate the internal neural state.” Shenoy and Stavisky are collaborating with the group doing human Neuropixels recordings, though for now those efforts are limited to short recordings made during surgical procedures, lasting minutes rather than the weeks or months of the BrainGate studies.
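Shenoy’s point about noisy neurons can be illustrated with a short simulation. Assuming, hypothetically, that a single latent state drives every neuron’s firing rate and that spike counts are Poisson, the sketch below estimates that state from one time bin with a least-squares readout and reports the error as the population grows. Under these assumptions the error shrinks roughly as one over the square root of the number of neurons. The gains, baseline rate and function name are illustrative choices, and the readout assumes each neuron’s tuning is already known.

```python
import numpy as np

def state_estimation_error(n_neurons, n_trials=2000, seed=2):
    """RMS error when estimating a 1D latent state from one time bin of n_neurons spike counts."""
    rng = np.random.default_rng(seed)
    gains = rng.uniform(0.5, 1.5, size=n_neurons)    # each neuron's sensitivity to the state (assumed)
    baseline = 10.0                                  # assumed baseline count per bin
    state = rng.normal(size=n_trials)                # true latent state on each single trial
    rates = baseline + np.outer(state, gains) * 3.0
    counts = rng.poisson(np.clip(rates, 0, None))    # Poisson noise on every neuron
    # Least-squares estimate of the state, assuming gains and baseline are known
    estimate = (counts - baseline) @ gains / (3.0 * gains @ gains)
    return float(np.sqrt(np.mean((estimate - state) ** 2)))

errors = {n: state_estimation_error(n) for n in (10, 100, 1000)}
for n, err in errors.items():
    print(f"{n:5d} neurons: RMS error {err:.3f}")
```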

From hundreds of neurons to thousands

To use Neuropixels in humans, even for short stints, Paulk and collaborators had to surmount a number of technical challenges. They had to develop thicker probes that could be inserted into the human cortex, as well as ways to correct for motion artifacts caused by the pulsating cortex, an issue that is much less problematic in smaller rodent brains. They also had to contend with the electrically noisy environment of the operating room, which can interfere with electrophysiology recordings. (For more on Neuropixels, see “A New Era in Neural Recording.”)

The researchers have made their data and code public so that others interested in using Neuropixels in humans can benefit from the tools they developed, and so that researchers studying other animals can start to make comparisons. “These are challenging cases, done in unique and rare settings; we want to share the knowledge,” Williams says. “With this dataset, people can start looking for differences in how neurons process information or communicate with other models.” (Another study using Neuropixels in humans was posted to bioRxiv in December.)

Though the main goal of Paulk’s study was to demonstrate how to use Neuropixels in humans, the results hint at broad potential for future experiments. Neuropixels recording sites are closely spaced, only about 20 micrometers apart, so a single neuron’s spike registers on several neighboring sites, which helps researchers characterize each neuron. “You can get a spatial and temporal fingerprint for each putative neuron, which lets you dissect out the different neurons that are active in the vicinity and gives a much finer-scale snapshot of neural activity,” Williams says.
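Why closely spaced sites help can be sketched with a simple template-matching toy. Below, two hypothetical neurons at neighboring depths along a 32-site shank produce spikes whose amplitude falls off with distance from each cell, and each noisy spike is assigned to whichever neuron’s spatial footprint it most resembles. Using the full footprint across sites separates the two cells far more reliably than a single site does. The Gaussian falloff, noise level and site count are assumptions, and real spike-sorting software for Neuropixels data is considerably more sophisticated.

```python
import numpy as np

def spatial_footprint(n_sites, center, spread, amplitude):
    """Peak spike amplitude on each recording site for a neuron near site `center` (hypothetical model)."""
    sites = np.arange(n_sites)
    return amplitude * np.exp(-0.5 * ((sites - center) / spread) ** 2)

def assign_spikes(snippets, templates):
    """Assign each spike (n_spikes x n_sites) to the nearest template by Euclidean distance."""
    dists = np.linalg.norm(snippets[:, None, :] - templates[None, :, :], axis=2)
    return np.argmin(dists, axis=1)

rng = np.random.default_rng(4)
n_sites = 32
# Two neurons of equal peak amplitude sitting three sites apart
templates = np.stack([
    spatial_footprint(n_sites, center=14.0, spread=2.0, amplitude=80.0),
    spatial_footprint(n_sites, center=17.0, spread=2.0, amplitude=80.0),
])
true_ids = rng.integers(0, 2, size=500)
snippets = templates[true_ids] + rng.normal(0.0, 10.0, size=(500, n_sites))

# Full spatial fingerprint versus a single site between the two cells
accuracy_all_sites = float(np.mean(assign_spikes(snippets, templates) == true_ids))
accuracy_one_site = float(np.mean(assign_spikes(snippets[:, [15]], templates[:, [15]]) == true_ids))
print(f"all sites: {accuracy_all_sites:.2f}, one site: {accuracy_one_site:.2f}")
```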

Analyzing spike shapes, or waveforms, can help researchers identify cell types, such as whether a cell is excitatory or inhibitory, and how it’s interacting with other cells, Paulk says. For Paulk, the most surprising finding so far is the sheer diversity of the waveforms they recorded — in shape, size and spread — including waveforms that have not been described in mice. Cash’s team is collaborating with Shenoy’s team to pool large Neuropixels datasets and to figure out how monkey and mouse research can inform human studies.
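One widely used heuristic for the cell-type question Paulk describes, common in rodent and monkey work though not necessarily the method used in this study, measures the time from a spike’s trough to the following peak: narrow spikes are often taken as putative inhibitory interneurons and broad spikes as putative excitatory cells. The sketch below applies that rule to two synthetic waveforms. The 30 kHz sampling rate, the 0.4 ms cutoff and the waveform shapes are assumptions, and given the waveform diversity reported in humans, such a simple cutoff should be treated with caution.

```python
import numpy as np

SAMPLE_RATE_HZ = 30_000  # assumed sampling rate of the spike-band signal

def trough_to_peak_ms(waveform, sample_rate_hz=SAMPLE_RATE_HZ):
    """Time from the waveform's minimum (trough) to the largest value after it, in milliseconds."""
    trough = int(np.argmin(waveform))
    peak = trough + int(np.argmax(waveform[trough:]))
    return (peak - trough) / sample_rate_hz * 1000.0

def classify_width(waveform, threshold_ms=0.4):
    """Heuristic label only; the 0.4 ms cutoff is an assumption."""
    if trough_to_peak_ms(waveform) < threshold_ms:
        return "narrow (putative inhibitory)"
    return "broad (putative excitatory)"

def synthetic_spike(trough_to_peak_s, sample_rate_hz=SAMPLE_RATE_HZ, n_samples=90):
    """Hypothetical extracellular spike: a sharp negative trough followed by a smaller positive peak."""
    t = np.arange(n_samples) / sample_rate_hz
    t_trough = 0.001                                   # trough placed 1 ms into the snippet
    trough = -np.exp(-0.5 * ((t - t_trough) / 0.0001) ** 2)
    peak = 0.4 * np.exp(-0.5 * ((t - t_trough - trough_to_peak_s) / 0.0002) ** 2)
    return trough + peak

narrow = synthetic_spike(0.0002)
broad = synthetic_spike(0.0008)
for name, w in (("narrow", narrow), ("broad", broad)):
    print(name, round(trough_to_peak_ms(w), 3), "ms ->", classify_width(w))
```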

Researchers are particularly excited by the prospect of recording from multiple layers of the cortex, whose neurons connect to distinct brain regions. “Because with these electrodes we can record from large local populations simultaneously, we can start to ask questions about how [cells in different layers] communicate with each other, how they transmit information and how this relates to complex cognitive processes,” Williams says. With data from three people so far, the researchers see differences in how neurons are distributed across layers, though Williams notes it’s too early to draw specific conclusions.

Stavisky’s and Cash’s teams are planning speech studies using Neuropixels. Because patients undergo procedures targeting different brain regions, researchers can study speech across a much broader range of areas. “You can have every person say the same set of words and sample from many more parts of the brain and then look at how multiple areas are involved in the relative timing of speech,” Stavisky says. “These are the kinds of questions we can ask with Neuropixels.”

Researchers eventually hope to do chronic recordings with Neuropixels but must first surmount a number of hurdles. “While such capability would have enormous potential, there remain significant engineering challenges such as how to anchor or stabilize the device,” Williams says. “We would also need to develop techniques such as wireless neuronal recordings to enable the free transmission of information outside the brain.”

Neuropixels isn’t the only high-resolution recording technology on the horizon. Companies such as Neuralink and Paradromics are also developing commercial devices for large-scale, long-term recording in humans. Neuralink, for example, has developed flexible electrode technology and a low-power wireless device for processing and transmitting neural signals, which is currently being tested in monkeys. (For more on alternative recording technologies, see “A New Era in Neural Recording Part 2: A Flexible Solution.”) Commercial investment in this technology could have major implications for both patients and scientists. Beyond making these technologies broadly accessible to the patients who need them, commercial development “frees up university investigators to do more advanced basic research, because they don’t have to do so much work to make the case that these things can help people someday,” says Shenoy, a co-founder of Neuralink.