Computers Have Memories Too

As a teenager, Sebastian Wolff fancied becoming an architect, only to turn to computer science when he discovered a talent for coding. He entered college expecting to become a computer programmer in a corporate IT division. While he was drawn to the challenge of creating elegant computer code, Wolff’s interests pivoted once again, this time to the theoretical foundations of computer science.

Wolff earned his doctorate at the Technical University of Braunschweig in Germany and is now a postdoc at New York University’s Courant Institute under the supervision of Thomas Wies. A first-year Junior Fellow with the Simons Society of Fellows, Wolff uses theory to solve practical computer problems caused by the advent of concurrent computer systems, in which a computer or set of computers works on many tasks at the same time.

Wolff and I recently discussed his work. Our conversation has been edited for clarity.

What are ‘concurrent’ computer systems?

In the early days of computer programming everything was sequential: One task finished before the next task began. But today, things are more complicated. Computers are ‘concurrent,’ meaning that they can perform many tasks simultaneously. This shift occurred in part because we reached the speed limits of sequential computing. But concurrency has also come about naturally in response to the steady increase in globally available web services. For example, people want every website they ever visit to load instantly and without any errors. This can’t happen without concurrency.

Concurrent computing can be performed on a single server, or many servers linked together. Let’s say that you and I both want to buy the same item on Amazon and there’s only one of them left in the world. I might be searching on a server in Europe while you put the same item in your cart on a U.S. server. Maybe I placed my order minutes before you did, but the item is in a U.S. warehouse and so could be shipped to you faster than it could be sent to me, at less cost to Amazon.

Do I get the item on a first-come, first-served principle, or do you get it based on logistical ease? Does Amazon sell the same thing to us both and issue a refund to one of us, or does it refuse to sell to either of us and hope only one person looks for it later? Each of these options are decisions embedded in computer code. The complexity arises because these decisions are all being made concurrently. In the old sequential world, my order would have prevailed without any question because I placed it first. End of story. But that’s almost never the case today.

In the situation you just described it seems ideal for the program to only allow one item to be sold if only one item is available — regardless of its location. How does computer theory come into this?

As you can see, decision-making in concurrent programs is quite involved. This is reflected in the code, which is complicated as well. Indeed, even the best programmers can make mistakes in the code they write. And those mistakes lead to undesired and unexpected behavior, like selling one item twice.

Computer theory — or formal methods, as we like to call it — provides the machinery for checking the code you’ve written and enabling you to see if your program operates as expected. What sparks my interest is that formal methods can be applied to any potential code or program. With these infinite possibilities, the challenge that then arises is to come up with useful theoretical machinery. In my work, my collaborators and I usually start by studying how programmers write code, distill from that the underlying principles, and then turn those principles into a theory.

How do you use theory to test whether a code works?

There are two aspects to this: testing a code’s output in different conditions, and then verifying that code under all possible conditions.

Testing is exactly what it sounds like: We run the code against a bunch of different scenarios and see if it acts as expected. In our shopping example, we could input different items and make sure that only those that exist in the warehouse are sold. It might be possible to get tests that show with 99% certainty that such a code will work, although that is quite unlikely for concurrent systems. In theory, testing can never result in 100% certainty because there’s always one more parameter that you could test. The possibilities are endless. Ensuring that the code underlying a program always works is where verification comes in.

Tell me more about verification.

Verification comes in three categories. Manual verification is a pencil-and-paper mathematical proof that a given piece of code will always work if certain conditions apply. This is the easiest type of verification to create and understand, but there’s always a risk that a wrong assumption will mean that the proof is invalid. Because people have published manual verification proofs that later turned out to be wrong, this approach isn’t as popular as it used to be.

Mechanized verification takes the human-generated manual verification and then runs a ‘proof assistant,’ essentially a computer program that checks the validity of the proof. This is more reliable than manual verification, but still requires a lot of upfront work to input the parameters that the mechanized verification tool attempts to validate.

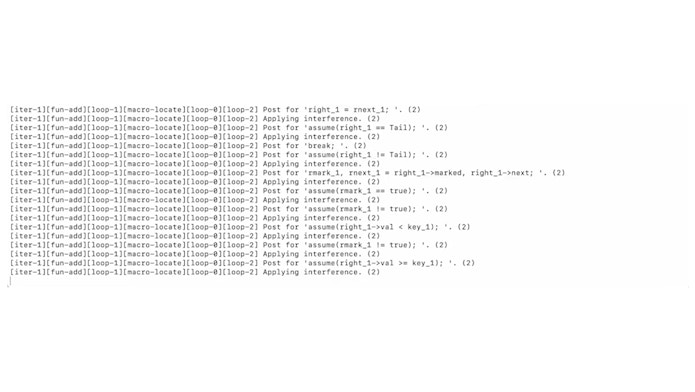

That brings us to automated verification, which is my particular interest and the focus of my doctoral work. This type of verification involves writing an algorithm to produce a proof of whether a program will always work. It’s advantageous because it doesn’t involve such a time commitment up front, while also being accessible to nonexperts.

What did you verify during your doctoral work?

A key foundation to my research was the concept of a data structure. Broadly speaking, a data structure maintains a collection of objects that are linked to each other, with rules governing the relationships between those objects. The collection of words in a dictionary is a data structure, for example, as is the collection of products in an online marketplace.

Something I became interested in during graduate school was memory reclamation, or what happens to items that are removed from a data structure and no longer used. For example, what should happen to a webpage for a concert for which ticket sales have closed? Do you leave the ticket site up forever, long after the concert happened? Do you completely delete the page? If so, when do you delete it?

Many data structures would leave the item as is and periodically scan through all items to delete those that have no more incoming links. This takes time and resources. Manually deleting items is sometimes preferred, but also imperfect; it is incredibly difficult to do in such a way that the deleted items are not accessed again.

In my research, I proposed a set of conditions on data structures that guarantee that the use of the aforementioned manual deletion and periodic scan-and-delete processes are indistinguishable. This approach reduces system crashes by preventing access to deleted items. More importantly, abandoning the manual deletion process during verification also makes the entire process much simpler. We were the first group to propose automated verification for these types of data structures. Programmers can use our work in exactly this way, making the switch between the two deletion processes easier.

By clicking to watch this video, you agree to our privacy policy.

How exciting! What are you working on now?

My doctoral work focused on solving the challenges within one class of data structures. While our theory was general, for our practical results we had to restrict one aspect of data structures: how items are linked together. During my postdoctoral appointment at NYU, I want to build on the principles from that experience to automate solutions to similar challenges facing a much larger set of data structures.

Finally, what are your thoughts about the Simons fellowship?

In computer science, output can come in waves: Sometimes nothing happens, sometimes everything happens at once. So, the three years of unrestricted support from the Simons Foundation is truly a blessing. This Junior Fellowship is giving me the resources and time to follow my research path wherever it leads.