CCA Colloquium: David Schwab

Title: Entropic forces from noisy training in overparameterized neural networks

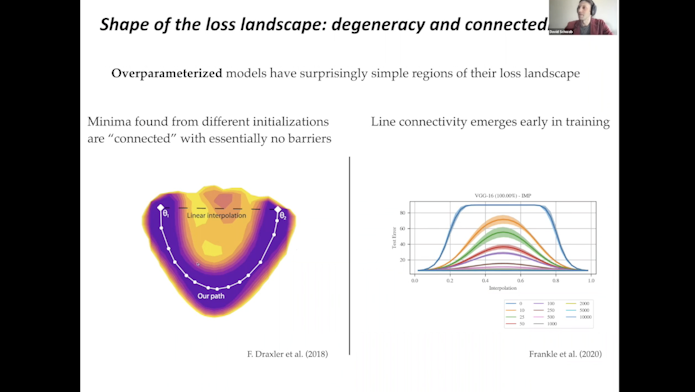

Abstract: Stochastic gradient descent (SGD) is the core optimization method for deep neural networks. Despite some theoretical progress, it remains unclear why SGD steers the learning dynamics of overparameterized networks toward solutions that generalize well. Here we show that for overparameterized networks whose loss landscape has a degenerate valley, SGD on average decreases the trace of the Hessian of the loss. We also generalize this result to other noise structures and show that isotropic noise in the non-degenerate subspace of the Hessian decreases its determinant.

Next, we turn to the question of characterizing and finding optimal representations for supervised learning. Traditionally, this question has been tackled using the Information Bottleneck, which compresses the inputs while retaining information about the targets in a decoder-agnostic fashion. We propose the Decodable Information Bottleneck (DIB), which considers information retention and compression from the perspective of the desired predictive family. Empirically, we show that the DIB framework can be used to enforce a small generalization gap on downstream classifiers and to predict the generalization ability of neural networks.
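To make the first claim concrete, here is a toy illustration. It is not the speaker's derivation or experimental setup. A two-parameter "network" f = w1 * w2 is fit to a target of 1, so the zero-loss set w1 * w2 = 1 is a degenerate valley. The source of gradient noise (Gaussian label noise with sigma = 1), the learning rate, the starting point, and the function names `hessian_trace` and `label_noise_sgd` are all assumptions chosen for illustration. The sketch tracks the Hessian trace w1**2 + w2**2 along the trajectory.

```python
import random


def hessian_trace(w1: float, w2: float) -> float:
    # For the per-sample loss l = 0.5 * (w1*w2 - y)**2 the Hessian is
    # [[w2**2, 2*w1*w2 - y], [2*w1*w2 - y, w1**2]], so its trace is
    # w1**2 + w2**2, independent of the label y.
    return w1 * w1 + w2 * w2


def label_noise_sgd(w1, w2, lr=0.02, sigma=1.0, steps=20000, seed=0):
    """SGD on 0.5*(w1*w2 - y)**2 with noisy labels y = 1 + sigma*xi.

    Hypothetical helper; label noise is an assumed noise model.
    Returns the Hessian trace after every step.
    """
    rng = random.Random(seed)
    traces = []
    for _ in range(steps):
        y = 1.0 + sigma * rng.gauss(0.0, 1.0)  # noisy label
        r = w1 * w2 - y                         # residual for this sample
        # Simultaneous gradient step on both weights. Each step multiplies
        # the imbalance w1**2 - w2**2 exactly by (1 - (lr*r)**2).
        w1, w2 = w1 - lr * r * w2, w2 - lr * r * w1
        traces.append(hessian_trace(w1, w2))
    return traces


# Start on the valley w1*w2 = 1, far from the balanced point |w1| = |w2| = 1.
w1_0, w2_0 = 4.0, 0.25
traces = label_noise_sgd(w1_0, w2_0)
start = hessian_trace(w1_0, w2_0)
late = sum(traces[-2000:]) / 2000
print(f"trace at start: {start:.4f}")
print(f"mean trace over last 2000 steps: {late:.3f}")
```

In this toy model, (w1**2 + w2**2)**2 = (w1**2 - w2**2)**2 + 4*(w1*w2)**2. Along the valley, the trace therefore equals sqrt((w1**2 - w2**2)**2 + 4), and it falls toward its minimum of 2 as the noise-driven factor (1 - (lr*r)**2) shrinks the imbalance. Noise-free gradient descent started at (4, 0.25) has zero residual and never moves. In this example, the drift toward a flatter point on the valley therefore comes entirely from the noise.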

March 19, 2021
