Dopamine: Beyond the Rush of a Reward

If you put enough money into a vending machine to buy one candy bar and the machine accidentally gives you two, the unexpected reward triggers a burst of dopamine in your brain. This is a classic example of learning through reinforcement; you’ll probably go back to that vending machine, hoping to repeat the windfall. Dopamine serves as a teaching signal, tracking the difference between a predicted and an actual reward — a discrepancy known in the field as reward prediction error — reinforcing behaviors thought to yield a future reward.

“The canonical view is that dopamine neurons send reward prediction error signals to teach other areas how to memorize the value of different things,” says Nao Uchida, a neuroscientist at Harvard University and an investigator with the Simons Collaboration on the Global Brain.
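For readers who want the idea in concrete terms, the teaching signal can be sketched as a simple update rule. This is a minimal illustration in the spirit of textbook error-driven learning, not a model taken from any of the studies described here. The function names, the learning rate and the candy-bar numbers are all assumptions chosen for the example.

```python
def update_value(predicted, actual, learning_rate=0.1):
    """Run one trial of error-driven learning.

    Returns the prediction error (actual minus predicted reward) and the
    updated prediction. The learning rate is a hypothetical parameter.
    """
    error = actual - predicted
    new_prediction = predicted + learning_rate * error
    return error, new_prediction


def simulate(rewards, initial_prediction=0.0, learning_rate=0.1):
    """Apply update_value across a sequence of rewards."""
    prediction = initial_prediction
    errors = []
    for reward in rewards:
        error, prediction = update_value(prediction, reward, learning_rate)
        errors.append(error)
    return errors, prediction


# Vending machine example: you expect one candy bar and receive two.
first_error, first_update = update_value(predicted=1.0, actual=2.0, learning_rate=0.5)

# If the machine kept paying out two bars, the surprise would fade.
errors, final_prediction = simulate([2.0] * 50, initial_prediction=1.0, learning_rate=0.5)
```

In this toy version, the error is large on the first windfall and shrinks toward zero as the prediction catches up, which mirrors the observation that dopamine neurons respond to unexpected rewards rather than to rewards in general.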

The reward prediction error model has a lot going for it. Besides being simple, it’s supported by extensive experimental and theoretical evidence. Dopamine neurons become active when animals get an unexpected reward. And activating dopamine neurons in response to a certain behavior mimics the effect of an unexpected reward, positively reinforcing that behavior. Conversely, inhibiting dopamine neurons prevents animals from learning these associations. “That’s nicely aligned with it being a teaching signal,” says Ilana Witten, a neuroscientist at Princeton University and an investigator with the SCGB.

But growing evidence from Witten, Uchida and others suggests that not all dopamine neurons fit this picture. Some dopamine neurons respond to movement, novelty or even aversive stimuli, activity that isn’t easily explained by the reward prediction error model. “The idea that dopamine is simply a signal of reward prediction error is worth revisiting,” says Adrienne Fairhall, a theoretical neuroscientist at the University of Washington and an investigator with the SCGB. “The story is more complicated.”

Diversifying dopamine

Much of the new information on dopamine relies on researchers’ relatively newfound ability to study different subpopulations of dopamine neurons independently. Their work has revealed that different cells can play different roles.

In research published in 2016, Witten’s team trained mice to perform a slot-machine-like task, where animals pulled one of two levers to get a reward. The reward lever randomly switched over time, creating lots of reward prediction errors. Researchers recorded dopamine activity in two brain regions, the nucleus accumbens and the dorsomedial striatum. By comparing rewarded and non-rewarded trials, the researchers could separate neural activity related to the movement from activity related to the reward itself. The findings confirmed that some dopamine neurons that project to both areas did indeed encode reward prediction errors. But the team found that dopamine activity within the dorsomedial striatum also carried information about the animal’s future action.

In a new set of experiments, the researchers trained animals to navigate a virtual T-maze with a series of visual cues on either side. The animal had to keep track of how many cues appeared on each side and turn in the direction that had more. (This is known as an evidence accumulation task.) Researchers used an implanted lens and two-photon calcium imaging to track dopamine activity in a part of the brain called the ventral tegmental area, which houses dopamine neurons that project to the nucleus accumbens and dorsal striatum.
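The logic of the task itself, as opposed to the neural recordings, can be captured in a few lines. The sketch below assumes cues are encoded as the letters "L" and "R" and that a tie has no correct answer. Both are illustrative choices, not details from the study.

```python
def choose_turn(cues):
    """Return the side with more cues, or None if the counts are tied.

    `cues` is any iterable of "L" and "R" markers, in the order they
    appear along the maze (a hypothetical encoding).
    """
    evidence = 0  # running tally: positive favors right, negative favors left
    for side in cues:
        if side == "R":
            evidence += 1
        elif side == "L":
            evidence -= 1
        else:
            raise ValueError(f"unknown cue: {side!r}")
    if evidence > 0:
        return "right"
    if evidence < 0:
        return "left"
    return None


# Three cues on the right and two on the left: the correct turn is right.
decision = choose_turn("LRRLR")
```

The running tally is the "accumulated evidence" that gives the task its name.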

As in the previous study, most dopamine neurons in this region responded to an unexpected reward. But some represented other factors not directly linked to the current reward, such as the animal’s position in the maze, its speed or acceleration, or whether a particular choice had previously been rewarded. “There’s a huge variety of neurons,” Witten says.

The different neurons seem to inhabit different parts of the ventral tegmental area. Neurons that represent accuracy are generally more medial, and neurons that represent kinematic variables are more lateral, Witten says. “There are different types of dopamine neurons, carrying different molecular markers, which have different functions.”


Researchers don’t yet know exactly what the results mean for the reward prediction error model of dopamine. Dopamine clearly represents information beyond the reward, but it’s possible that non-reward signals could still represent some kind of prediction error, such as whether an action was predicted. “I personally doubt that will be the entire story, though it might be part or a lot of it,” Witten says.

To find answers, scientists will need to determine the extent to which dopamine supports ongoing performance as opposed to learning, which influences future performance. In the vending machine example, the burst of dopamine that occurs right after you get the candy drives learning: It reinforces the previous action — going to the vending machine — motivating you to return to the vending machine the next day. A burst of dopamine that occurs when you’re sitting in your office, by contrast, drives performance by motivating you to get up and go to the vending machine.

“An error signal is something that should drive learning but not necessarily performance,” Witten says. “Does manipulating dopamine neurons affect ongoing behavior or just learning? The jury is still out.”

A novel threat

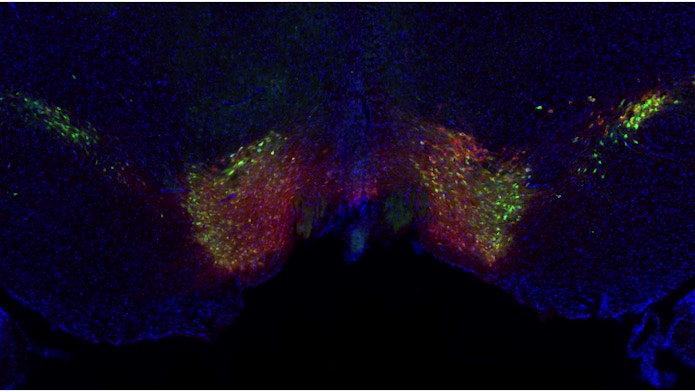

Uchida has also found that different dopamine neurons perform different functions. Dopamine neurons in the ventral tegmental area and the substantia nigra project to multiple areas, with the densest projections going to the striatum. These neurons target different regions of the striatum, including the ventral striatum, the dorsal medial striatum, the dorsal lateral striatum, and the tail of the striatum.

To understand how different regions of the striatum behave, Uchida and collaborators use an optical fiber inserted into the target region to record calcium activity in dopamine neurons’ axonal projections.

“Neurons that project to the ventral striatum, also known as the nucleus accumbens, are the canonical reward prediction error type,” Uchida says. Scientists have measured these neurons in many different contexts. But Uchida’s team has shown that some neurons that project to the tail of the striatum behave differently, responding to both aversive and novel stimuli. “This is one answer to the controversy,” Uchida says. “Different signals exist but in different regions.”

In a study published in Nature Neuroscience in September 2018, Mitsuko Watabe-Uchida, a research fellow in Uchida’s lab, and collaborators further explored the role of dopamine neurons that project to this region. They tracked the response of those neurons to a range of stimuli, including sounds of varying intensity, air puffs and bitter-tasting chemicals. By testing several types of stimuli, the researchers determined that the cells were responding to both novelty and some (but not all) negative events — an air puff or a loud sound but not a bitter taste, for example.

The researchers found that stimulating dopamine neurons in the tail of the striatum is aversive — animals will avoid an action linked to stimulation of these neurons, highlighting just how different they are from canonical dopamine neurons, Uchida says.

Ablating dopamine neurons that project to the tail of the striatum blocked the animals’ ability to learn to link an action with a negative consequence. Animals still retreated from a threatening stimulus, such as a novel object, when they first encountered it. But on subsequent trials, they stopped being afraid of the stimulus much more quickly than animals whose neurons were intact. The results suggest that this subgroup of dopamine neurons reinforces an animal’s choice to avoid a negative stimulus.

Uchida theorizes that these neurons are involved in something similar to reward prediction error — namely, threat prediction error. “The same algorithm might be at play using different signals,” he says. Rather than calculating the error between a predicted and an actual reward, these neurons may calculate the error between a predicted and an actual threat.
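Written out, the parallel might look like the following. The symbols are illustrative shorthand introduced here, not notation from Uchida's work: r stands for reward, t for threat, and each error term is simply the actual value minus the predicted one.

```latex
\delta_{\text{reward}} = r_{\text{actual}} - r_{\text{predicted}},
\qquad
\delta_{\text{threat}} = t_{\text{actual}} - t_{\text{predicted}}
```

Under this reading, the computation is unchanged and only the quantity being predicted differs.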

Signal timing

The evidence for dopamine’s wider role in brain function extends beyond rodents. The fruit fly brain, which houses roughly 600 dopamine neurons, provides a simple substrate for dissecting the chemical’s role in learning. A well-mapped structure known as the mushroom body mediates olfactory learning, which enables flies to associate a particular smell with a reward or punishment. Kenyon cells relay odor information to mushroom body output neurons (MBONs), which are organized into compartments. Each compartment also gets input from a unique population of dopamine neurons. These dopamine neurons can respond to positive reinforcement, such as a sugar treat, and to negative reinforcement, such as a mild shock.

Releasing dopamine into a specific compartment changes the synaptic connections between the Kenyon cells and the MBONs, triggering learning. Simply activating different dopamine neurons in the presence of an odor is sufficient to teach flies to like or avoid it.

Vanessa Ruta, a neuroscientist at Rockefeller University and an investigator with the SCGB, has found that dopamine neurons targeting the mushroom body not only respond to positive or negative stimuli but also provide an ongoing record of what the animal is doing. Ruta’s team images dopamine neurons while flies actively track an odor, allowing the researchers to correlate neuron activity and behavior. They showed that the same dopamine neurons that are involved in olfactory learning can carry information about the animal’s movement. Some are active when the animal begins to move, others when the animal is speeding up or turning toward an odor. “Our work begins to suggest that these neurons don’t just simply reflect sensory cues or motor actions but the behavioral context or motivation underlying these actions,” Ruta says.

This discovery was particularly interesting to Fairhall, who had been studying dopamine’s role in birdsong. Fairhall analyzed data from Ruta’s lab, using linear convolution models to systematically relate dopamine signals to different variables. She found that dopamine encodes not just whether a particular smell is tied to a reward or punishment but a variety of complex factors. Different compartments seem to represent different properties. For example, dopamine activity in one compartment correlates with whether the animal is walking or not, irrespective of reward. Dopamine activity in another compartment contains information about the odor, the animal’s walking speed, which way it’s heading when it encounters the odor, and the direction in which the wind is blowing the odor.
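A linear convolution model treats a recorded signal as the sum of several behavioral variables, each filtered through its own short temporal kernel. The sketch below shows the general technique on synthetic data using NumPy least squares. It is not a reconstruction of Fairhall's analysis: the variable names (`speed`, `odor`), the kernel shapes and the noise-free signal are all assumptions made for illustration.

```python
import numpy as np


def lagged_design(x, n_lags):
    """Build a matrix whose columns are x delayed by 0..n_lags-1 samples."""
    x = np.asarray(x, dtype=float)
    design = np.zeros((len(x), n_lags))
    for lag in range(n_lags):
        design[lag:, lag] = x[: len(x) - lag]  # zero-padded at the start
    return design


def fit_kernels(signal, regressors, n_lags):
    """Fit one temporal kernel per regressor by ordinary least squares."""
    design = np.hstack([lagged_design(r, n_lags) for r in regressors.values()])
    coef, *_ = np.linalg.lstsq(design, np.asarray(signal, dtype=float), rcond=None)
    return {
        name: coef[i * n_lags : (i + 1) * n_lags]
        for i, name in enumerate(regressors)
    }


# Synthetic example: a made-up "dopamine" trace built from two variables.
rng = np.random.default_rng(0)
n_samples, n_lags = 500, 3
speed = rng.normal(size=n_samples)                    # hypothetical walking speed
odor = (rng.random(n_samples) < 0.1).astype(float)    # hypothetical odor encounters
true_speed_kernel = np.array([0.5, 0.3, 0.1])
true_odor_kernel = np.array([1.0, 0.6, 0.2])
signal = (
    np.convolve(speed, true_speed_kernel)[:n_samples]
    + np.convolve(odor, true_odor_kernel)[:n_samples]
)

kernels = fit_kernels(signal, {"speed": speed, "odor": odor}, n_lags)
```

Because the synthetic trace contains no noise, the fitted kernels match the ones used to generate it. With real recordings, the size and shape of each fitted kernel would indicate how strongly, and over what timescale, a given variable is reflected in the signal.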

The findings challenge the notion of dopamine as a simple reinforcement signal. “Recording dopamine signals under natural conditions reveals they carry rich information content, much richer than a binary reward signal,” Fairhall says. The fact that these neurons appear to integrate different types of variables, including odor identity, reward context, self-motion and external variables like wind speed, suggests that they are performing more complex computations than simply calculating the difference between a predicted and an actual reward.

“We need to update current learning models based on dopamine signals,” Fairhall says. “The big question for us that remains is how to extend the model of the learning process. What kind of algorithm is at play when the animal is not simply getting a reward prediction, when it incorporates other aspects of behavior? How should we think about other information that’s being represented?”

Researchers have gained their deeper understanding of dopamine with the aid of new technology that enables them to visualize specific dopamine inputs to the mushroom body while flies behave in a natural way — moving freely as they track an odor. “Having animals doing something more naturalistic while tracking dopamine signals is relatively new,” Fairhall says. “Now we can look at specific inputs of specific neurons and interpret their response in terms of the complicated activities that the animal is doing.”

Ruta and Fairhall now plan to explore how dopamine cells multiplex signals. They will examine the interaction between motor control and reinforcement learning and try to understand why learning signals and signals about the animal’s ongoing behavior might be combined in single cells.

Ruta has also found that the timing of the dopamine signal is vitally important in influencing behavior: The same signal can make an odor seem appealing or uninteresting depending on whether it’s delivered before or after the odor. In these experiments, researchers activated dopamine cells as flies walked on a treadmill-like ball. Animals tend to walk toward odors with positive associations, such as those linked to a sugar reward, but not those with negative ones, such as those linked to a shock. Presenting an odor first and then triggering dopamine release from neurons that respond to rewards made the odor more enticing, and flies moved toward it faster. Triggering the same dopamine neurons before presenting the odor decreased the animal’s approach, suggesting that the animal was no longer interested in that smell. “Together, these results suggest that animals use the relative timing of events to learn the causal relationship between events in their environment,” Ruta says.
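The order dependence can be expressed as a toy rule in which only the sign of the delay between odor and dopamine matters. This is a deliberately crude sketch, not Ruta's model. The function name, the fixed magnitude and the choice to return zero for simultaneous events are assumptions, since the experiments described here do not specify those details.

```python
def approach_change(odor_time, dopamine_time, magnitude=1.0):
    """Return a toy change in approach toward an odor.

    Positive when reward-related dopamine follows the odor, negative when
    it precedes the odor. Simultaneous events return 0.0 (an assumption).
    """
    delay = dopamine_time - odor_time
    if delay > 0:
        return magnitude   # odor first, then dopamine: odor becomes more enticing
    if delay < 0:
        return -magnitude  # dopamine first, then odor: approach decreases
    return 0.0


# Odor at t = 0 s, dopamine at t = 1 s, and the reverse ordering.
odor_then_dopamine = approach_change(odor_time=0.0, dopamine_time=1.0)
dopamine_then_odor = approach_change(odor_time=1.0, dopamine_time=0.0)
```

The same dopamine event produces opposite outcomes in the two calls, which is the core of the timing result.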

Ruta proposes that the same signal can induce opposite effects because of opposing forms of plasticity. Cells in the mushroom body have two types of dopamine receptors, each of which has a distinct downstream signaling cascade. Activating dopamine cells before or after the odor generates distinct patterns of intracellular signaling. In her current SCGB project, Ruta and collaborators are studying how the animal’s internal state, such as whether it’s hungry, influences how dopamine represents these different variables and how this influences behavior.