Taming the Data from Freely Moving Animals

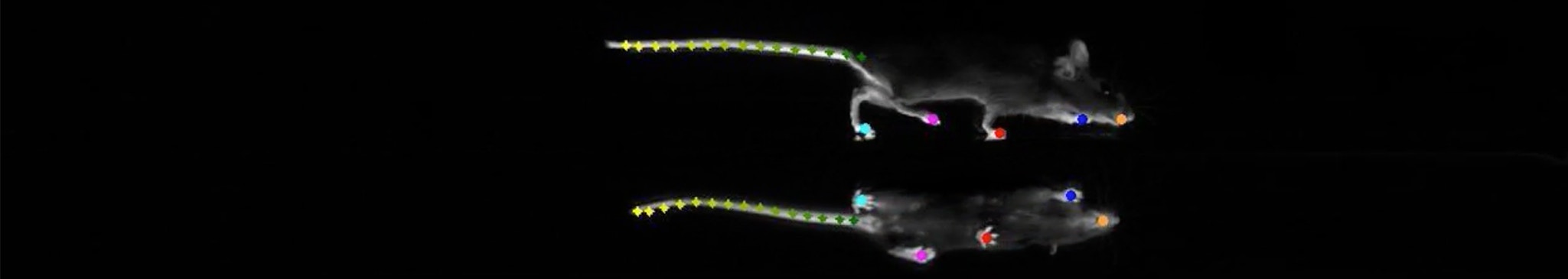

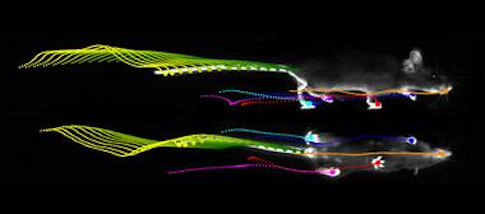

In London, on December 1, 2017, Megan Carey of Lisbon’s Champalimaud Centre for the Unknown described to a packed room the ‘LocoMouse’ system her lab had developed to precisely track a mouse’s four paws, tail and nose as it walked.

The talk was part of a symposium entitled “The Role of Naturalistic Behavior in Neuroscience.” People were excited about how neuroscience might incorporate behaviors that animals had actually evolved to engage in. Carey was studying walking and the cerebellum — a seemingly simple behavior and a brain region known for more than a century to coordinate movement. Unsurprisingly, mice without cerebellums stumbled along slowly.

But digging into a mass of data about multiple moving body parts revealed that many assumptions people held about the cerebellum were wrong. When corrected for the smaller size and slower speed of cerebellum-less animals, common readouts, such as stride length and cadence, were completely normal. Only a fraction of the many parameters captured by the LocoMouse system were actually off. Most strikingly, without a cerebellum, a mouse’s tail and body passively swayed in response to its limbs’ movements.
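The size and speed correction can be illustrated with a minimal sketch. The article does not say how Carey's team made the correction. This version assumes, for illustration only, a linear relation between walking speed and stride length in control mice. It fits that relation by least squares and then asks how far each mutant stride falls from the control prediction at the same speed. All numbers are invented, and body size could be added as a second predictor in the same way.

```python
from statistics import mean

def fit_line(xs, ys):
    """Ordinary least-squares fit of y = a + b*x; returns (a, b)."""
    mx, my = mean(xs), mean(ys)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    b = sxy / sxx
    return my - b * mx, b

def residuals(xs, ys, line):
    """Observed value minus the control-predicted value at each animal's own speed."""
    a, b = line
    return [y - (a + b * x) for x, y in zip(xs, ys)]

# Made-up example data: walking speed (cm/s) and stride length (cm).
ctrl_speed = [10.0, 15.0, 20.0, 25.0, 30.0]
ctrl_stride = [4.0, 5.0, 6.0, 7.0, 8.0]
mutant_speed = [8.0, 10.0, 12.0]
mutant_stride = [3.6, 4.0, 4.4]

ctrl_line = fit_line(ctrl_speed, ctrl_stride)
raw_gap = mean(mutant_stride) - mean(ctrl_stride)              # naive comparison
corrected = residuals(mutant_speed, mutant_stride, ctrl_line)  # speed-matched comparison
print(f"raw difference: {raw_gap:.2f} cm")
print("speed-corrected residuals:", [f"{r:+.2f}" for r in corrected])
```

In this toy case the slower mutants look as if they take much shorter strides, yet every stride sits on the control line once speed is taken into account.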

From this dataset, Carey deduced that the cerebellum sends signals that make a mouse’s body predict and cancel out its own passive reaction to walking. Simply looking very closely at a familiar behavior — walking — and measuring it in new ways clarified what the cerebellum does during murine locomotion.

Two and a half years on, systems such as Carey’s, which use computer vision and machine learning techniques to record and analyze freely moving animals, have entered mainstream neuroscience. The hope is that novel, richer descriptions of behavior that don’t rely on human categorization will enable new insights into brain function.

A “call to action” review paper, published in Neuron in 2019 and inspired by a workshop, “Unbiased Quantitative Analysis of Behavior,” sponsored by the Simons Collaboration on the Global Brain (SCGB), outlines the challenges and opportunities the nascent field of computational neuroethology faces. Most pressingly, researchers are grappling with how best to use the data produced by high-resolution automated systems. Big behavioral data are complex, and how they’re generated, analyzed and used to probe neural activity shapes the conclusions drawn about how the brain operates.

“I’m a little bit worried that people think if they just measure behavior, they’ll have the answer to their question,” Carey says in her Lisbon office. “Now it’s gotten easy to get high-dimensional representations of behavior. But it’s always going to be a challenge to extract meaningful conceptual insights from that.”

Natural behaviors

A major advantage of automated tracking is the ability to study naturally occurring behaviors rather than the simple, restricted tasks that have been a mainstay of behavioral neuroscience. Tightly controlled behavior is more experimentally tractable — scientists can more easily relate ongoing changes in neural activity to an animal’s actions, for example — but it provides a limited view of the brain. “We have learned a ton about brain mechanisms and computations from reductionist tasks. But brains were not actually built by evolution to solve these kinds of tasks,” says Bob Datta, a neurobiologist at Harvard University and an investigator with the SCGB. “They were built to holistically interact with the world around the animal.”

Simple tasks, in which animals sequentially perceive, cogitate, then act, miss the complex interplay between perception, cognition and action that defines natural behavior. “The way that animals sample their environments, the way they understand the world, is through behavior,” Datta says. “And that deeply influences patterns of activity in neural circuits.”

Studying brain activity in unrestrained animals is challenging because of the uncertainty regarding exactly what they’re doing at any specific moment. By providing a moment-by-moment record of behavior, tracking technologies create a framework for relating natural behaviors to the neurobiology mediating them.

Tracking systems address this problem with a mix of classical machine learning and newer artificial intelligence techniques. Deep neural networks (DNNs) running complex tracking algorithms, for example, enable researchers to label massive datasets and to track an animal’s posture as it moves about more complex environments.

The algorithms themselves come in two basic flavors — supervised, where the algorithm is told what to look for, and unsupervised, where algorithms search for statistical regularities in data without any prior indication of what these might be. Developers can specify aspects of an unsupervised algorithm’s structure in order to better understand its outputs.

Supervised algorithms are fed reams of training data — examples of what they must learn to recognize and label accordingly. In classic computer vision, that might be images of the number 3. In computational ethology, it could be videos of fruit flies sleeping. Critically, the training data are first categorized by humans.

Kristin Branson, a computer scientist at the Howard Hughes Medical Institute’s Janelia Research Campus and a pioneer of this approach, says a major benefit of supervised learning is the ability to quantitatively evaluate how well your algorithm works. “For people in machine learning, it’s much easier to get into the problem if you know what the answer is,” she says.
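To make the supervised recipe concrete, here is a minimal sketch using a nearest-centroid classifier, chosen only because it fits in a few lines. It is not the method of any tool named in this article. The per-frame features, behavior names and values are hypothetical. Because the training frames carry human labels, human labels on held-out frames give the kind of quantitative score Branson describes.

```python
from math import dist
from statistics import mean

def train_centroids(features, labels):
    """Supervised step: average the human-labelled examples of each behavior."""
    groups = {}
    for f, lab in zip(features, labels):
        groups.setdefault(lab, []).append(f)
    return {lab: tuple(mean(col) for col in zip(*rows)) for lab, rows in groups.items()}

def predict(centroids, f):
    """Assign the label whose centroid is closest in feature space."""
    return min(centroids, key=lambda lab: dist(centroids[lab], f))

def accuracy(centroids, features, labels):
    """Fraction of held-out frames whose predicted label matches the human label."""
    hits = sum(predict(centroids, f) == lab for f, lab in zip(features, labels))
    return hits / len(labels)

# Hypothetical per-frame features: (body speed in mm/s, limb-movement score).
train_x = [(0.1, 0.0), (0.2, 0.1), (12.0, 0.8), (15.0, 0.9), (0.5, 2.0), (0.3, 2.2)]
train_y = ["sleep", "sleep", "walk", "walk", "groom", "groom"]
test_x = [(0.0, 0.1), (13.0, 1.0), (0.4, 1.8)]
test_y = ["sleep", "walk", "groom"]

centroids = train_centroids(train_x, train_y)
print("held-out accuracy:", accuracy(centroids, test_x, test_y))
```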

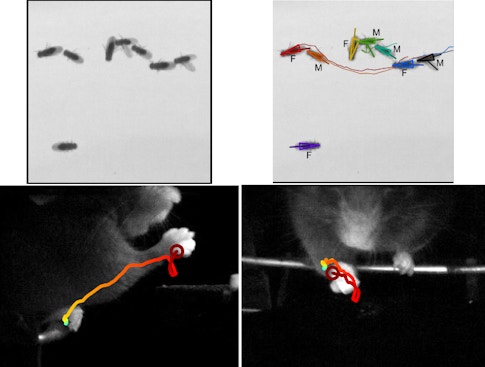

Supervised algorithms permit experiments on previously unimaginable scales. For example, Branson published a study in 2017 that analyzed videos of 400,000 flies to assess how optogenetically stimulating 2,204 different sets of genetically defined neurons affected Drosophila aggression, courtship, locomotion, sensory processing and sleep. (For more, see “New Dataset Explores Neuronal Basis of Behavior.”)

MacKenzie Mathis, at the École polytechnique fédérale de Lausanne, and colleagues have used DNNs to develop DeepLabCut, which works across species and requires relatively little training data. This toolbox is now open source, adding to several off-the-shelf supervised algorithms available for analyzing behavior, including DeeperCut, Stacked Hourglass, OpenPose, UNet Network (the system that Branson’s lab works with) and various others.

For interpreting in vivo recordings of neural activity, this type of behavioral monitoring provides a vital service in simply time-stamping labeled behaviors. “The importance of the labels is to be able to look into the brain and to know when a behavior begins and ends, to understand the sequence of behavior, and to ask how the temporal organization of behavior is reflected in the temporal organization of neural activity,” Datta says.
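Datta's point about knowing when a behavior begins and ends can be sketched as collapsing per-frame labels into time-stamped bouts. The function name `to_bouts`, the 30-frames-per-second rate and the labels below are illustrative assumptions, not part of any specific tracking package.

```python
from itertools import groupby

def to_bouts(frame_labels, fps):
    """Collapse per-frame labels into (label, start_s, end_s) bouts; end is exclusive."""
    bouts, frame = [], 0
    for label, run in groupby(frame_labels):
        n = sum(1 for _ in run)  # length of this run of identical labels
        bouts.append((label, frame / fps, (frame + n) / fps))
        frame += n
    return bouts

# Hypothetical output of a per-frame classifier at 30 frames per second.
labels = ["rest"] * 3 + ["walk"] * 6 + ["groom"] * 3
for label, start, end in to_bouts(labels, fps=30):
    print(f"{label:>5}: {start:.2f}-{end:.2f} s")
```

With bouts in hand, each start time can be lined up against a simultaneously recorded neural trace.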

Supervised algorithms excel at tracking well-defined behaviors. But for behaviors not easily detected or defined by human observers, researchers are increasingly turning to unsupervised machine learning.
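For contrast, here is a minimal unsupervised sketch. Plain k-means, a classical clustering method and not MoSeq or any other tool mentioned here, groups hypothetical per-frame features without ever seeing a label. Its output is only a set of cluster numbers, so deciding what each cluster means is left to the researcher.

```python
from math import dist
from statistics import mean

def kmeans(points, k, iters=20):
    """Plain k-means: alternate assign-to-nearest-centre and recompute-centres."""
    centres = list(points[:k])  # naive deterministic init: first k points
    for _ in range(iters):
        clusters = [[] for _ in range(k)]
        for p in points:
            nearest = min(range(k), key=lambda i: dist(centres[i], p))
            clusters[nearest].append(p)
        # Move each centre to the mean of its cluster (keep it if the cluster is empty).
        centres = [tuple(mean(c) for c in zip(*cl)) if cl else centres[i]
                   for i, cl in enumerate(clusters)]
    labels = [min(range(k), key=lambda i: dist(centres[i], p)) for p in points]
    return centres, labels

# Hypothetical, unlabelled per-frame features.
points = [(0.1, 0.0), (12.0, 0.8), (0.5, 2.0), (0.2, 0.1), (15.0, 0.9), (0.3, 2.2)]
centres, labels = kmeans(points, k=3)
print("cluster ids:", labels)
```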


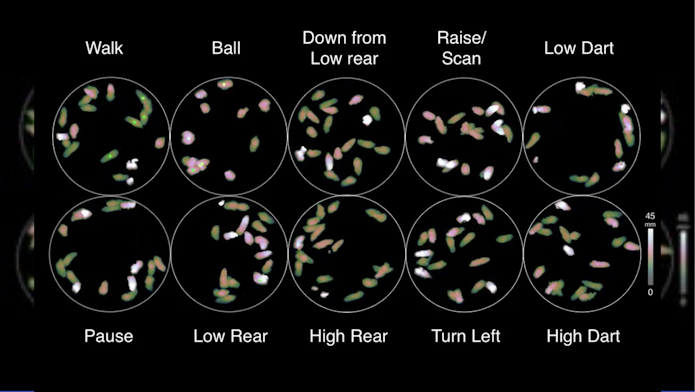

Datta’s lab developed an unsupervised system named Motion Sequencing, or MoSeq, which analyzes the three-dimensional pose dynamics of mice over time. It indicated that mouse behavior is composed of roughly 300-millisecond chunks, which the lab termed syllables of behavior. MoSeq identified approximately 30 distinct syllables when used on mice in an open arena, but Datta says that more are found when changes are made to the contexts in which mice are analyzed. (For more, see “Decoding Body Language Reveals How the Brain Organizes Behavior.”)

The organizing hypothesis that emerged from identifying syllables was that brains generate larger-scale behaviors by chaining together syllables. That is, behavior has a grammar. Ongoing work by Datta’s lab seeks to describe that grammar, mapping transitions between different syllables. Others are exploring a similar framework in C. elegans.
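Mapping transitions between syllables can be illustrated by estimating, from a labeled sequence, the probability that one syllable follows another. This is a minimal sketch, not MoSeq's own code. The syllable names are hypothetical (MoSeq identifies syllables itself rather than naming them in advance), and consecutive frames of the same syllable are collapsed so that only switches count as transitions.

```python
from collections import Counter, defaultdict
from itertools import groupby

def transition_probs(frames):
    """Estimate P(next syllable | current syllable) from one labelled sequence."""
    seq = [s for s, _ in groupby(frames)]  # collapse runs of identical frames
    counts = defaultdict(Counter)
    for cur, nxt in zip(seq, seq[1:]):
        counts[cur][nxt] += 1
    probs = {}
    for cur, nexts in counts.items():
        total = sum(nexts.values())
        probs[cur] = {nxt: n / total for nxt, n in nexts.items()}
    return probs

# Hypothetical per-frame syllable labels.
frames = ["rear", "rear", "turn", "walk", "walk", "rear", "turn", "groom", "rear", "walk"]
for cur, row in transition_probs(frames).items():
    print(cur, "->", row)
```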

Evaluating algorithms

DNNs are powerful tools. They can uncover the latent structure in data and predict future behavior. But these algorithms are famously impenetrable, meaning that users typically can’t articulate what the DNN does. While the performance of supervised algorithms using DNNs can be easily evaluated, scientists still need to build a conceptual framework for unsupervised ones that describes, in a more understandable way, how the behavioral data have been classified and what that means in terms of behavioral organization and function.

Researchers using unsupervised algorithms can start to address this problem by constraining an algorithm’s structure. These constraints make the algorithm less mathematically flexible and reduce its predictive power, but the added structure makes its output easier for experimenters to understand.

“This is a matter of taste — interpretability versus predictability,” Datta says. “The ways in which you use mathematical tools to characterize behavioral and neural data reflect your biases as to what you’re interested in.” For example, Datta’s lab uses less structured algorithms for real-time optogenetic interventions in behaving mice, where optimal predictability is prized. But MoSeq was designed primarily to give an interpretable output.

“We like the conceptual granularity that a syllable provides,” Datta says. “It’s a thing you can look at, you can describe in words — and a unit of behavior that seems meaningful to the animal in the sense that you can easily imagine that the animal’s own brain is interpreting the syllable.”

Nevertheless, when using unsupervised techniques, the decision to constrain a system and the need to interpret its outputs impose a greater burden of proof. “You want it to show you something you didn’t expect,” Branson says. “But you don’t know if the thing that it shows you is a real thing, or just some kind of statistical anomaly.” It’s important, she says, that neuroscientists understand the basics of machine learning when using such technologies.

One way to assess unsupervised algorithms is to consider how closely automatically defined behaviors correlate with what the brain is doing. To confirm that MoSeq’s syllables were meaningful, for example, Datta’s group sought links between syllables and neuronal activity. “Failing to do so would have meant that maybe there’s something about the way we were defining behavior that wasn’t entirely accurate or reflecting how the brain controls behavior,” Datta says.

Simultaneous imaging of both behavior and neural activity in the striatum. Credit: Datta lab.

The group first examined activity in the striatum, finding that neuronal firing there corresponded to the behavioral organization MoSeq had identified. Lesioning the striatum changed how animals organized the syllables — the grammar — and not the syllables themselves, confirming that this activity was responsible for the observed behavioral structure and indicating a conceptual framework with which to interrogate striatal function.
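One common way to look for links between behavioral units and neural activity is an event-triggered average: align the neural signal to each onset of a given syllable and average across onsets. The sketch below assumes frame-aligned behavior and neural data and uses invented values. It is not a description of the Datta lab's analysis, which would also need controls such as comparison against randomly chosen onset times.

```python
from statistics import mean

def onset_frames(syllables, target):
    """Frames where `target` starts, i.e. the first frame of each run of it."""
    return [i for i, s in enumerate(syllables)
            if s == target and (i == 0 or syllables[i - 1] != target)]

def triggered_average(signal, onsets, pre, post):
    """Mean of signal[t - pre : t + post] over onsets whose window fits in the recording."""
    windows = [signal[t - pre:t + post] for t in onsets
               if t - pre >= 0 and t + post <= len(signal)]
    return [mean(vals) for vals in zip(*windows)]

# Invented, frame-aligned data: one syllable label and one neural sample per frame.
syllables = ["walk"] * 4 + ["turn"] * 3 + ["walk"] * 3 + ["turn"] * 3 + ["walk"] * 2
signal = [0.0, 0.0, 0.1, 0.2, 1.0, 0.8, 0.3, 0.1, 0.0, 0.2, 1.2, 0.6, 0.3, 0.0, 0.1]

onsets = onset_frames(syllables, "turn")
print(onsets, triggered_average(signal, onsets, pre=2, post=3))
```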

Another example of how automated behavioral analysis can lead to insights into the organization of neural computations comes from Adam Calhoun, a postdoctoral fellow in Mala Murthy’s lab at Princeton University and a fellow with the SCGB. Calhoun examines how internal states affect fruit fly decision-making. Studying male flies during courtship, Calhoun combined behavioral data with data about sensory inputs from females. Then he asked if males use different strategies and make different choices at different times and in different contexts. Using an unsupervised framework with interpretable structure, Calhoun found that the best way to predict a male fly’s song choice was to attribute its behavioral choices to three different internal states. To support this model and tie it to a neural substrate, Calhoun and collaborators identified neurons whose activity controlled switching between states and thereby changed the ways in which sensory inputs led to behavioral outputs. (For more, see “How Fruit Flies Woo a Mate.”)
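The idea of hidden internal states that change how an animal behaves can be sketched with a hidden Markov model. The sketch below is a deliberately simplified stand-in, not Calhoun's model: it ignores sensory inputs, and its three states, transition probabilities and song-type emission probabilities are all invented. It runs the standard forward algorithm to track, step by step, how probable each hidden state is given the song choices observed so far.

```python
def forward_filter(obs, start, trans, emit):
    """Normalised HMM forward pass: P(state at t | observations up to t)."""
    n = len(start)

    def normalise(v):
        total = sum(v)
        return [x / total for x in v]

    belief = normalise([start[s] * emit[s][obs[0]] for s in range(n)])
    history = [belief]
    for o in obs[1:]:
        # Predict: push the previous belief through the state-transition matrix.
        predicted = [sum(belief[r] * trans[r][s] for r in range(n)) for s in range(n)]
        # Update: weight each state by how well it explains the new observation.
        belief = normalise([predicted[s] * emit[s][o] for s in range(n)])
        history.append(belief)
    return history

# Invented parameters for three "sticky" hidden states.
start = [1 / 3, 1 / 3, 1 / 3]
trans = [[0.90, 0.05, 0.05],
         [0.05, 0.90, 0.05],
         [0.05, 0.05, 0.90]]
emit = [{"pulse": 0.8, "sine": 0.1, "quiet": 0.1},   # state 0 favours pulse song
        {"pulse": 0.1, "sine": 0.8, "quiet": 0.1},   # state 1 favours sine song
        {"pulse": 0.1, "sine": 0.1, "quiet": 0.8}]   # state 2 favours silence

choices = ["pulse"] * 4 + ["sine"] * 4
for t, belief in enumerate(forward_filter(choices, start, trans, emit)):
    best = max(range(3), key=lambda s: belief[s])
    print(t, choices[t], "most likely state:", best)
```

Because the states are sticky, the model's belief lags a step or two behind the switch in song type, which is the kind of history dependence a purely stimulus-driven model would miss.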

A newer option is to analyze concurrent behavioral and neural data together from the start, rather than modeling behavioral data on its own. Datta’s group is working with Scott Linderman, a neuroscientist at Stanford University and an SCGB investigator, to explore this type of algorithm. They hope that combining data this way will allow neural data to influence the structure found in behavioral data and vice versa.

Beyond the algorithm

How exactly machine learning algorithms define behavior — how behaviors are broken down or built up — will critically influence how researchers interpret their results. MoSeq, for example, excels at detecting syllables, which are relatively stereotyped and recurrent. But it struggles with longer time scales, where, Branson says, “behavior will be less stereotyped [and] patterns will be much more subtle.” Every extra time point added to a behavioral sequence increases its dimensionality. “From an algorithmic point of view, finding patterns across a long time scale is really hard,” she says.
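Branson's point about dimensionality can be made concrete with a time-delay embedding, one common (but not the only) way to give a pattern-finding algorithm access to time: stack consecutive frames into one vector. The pose size (8 keypoints with x and y coordinates, so 16 numbers per frame) and the 30-frames-per-second rate are assumptions for illustration. At that rate a roughly 300-millisecond syllable spans about 9 frames, while a 10-second window spans 300.

```python
def delay_embed(frames, window):
    """Stack `window` consecutive frames into one flat vector per time step."""
    return [[x for frame in frames[t:t + window] for x in frame]
            for t in range(len(frames) - window + 1)]

FPS = 30                                       # assumed camera frame rate
pose = [[float(i)] * 16 for i in range(300)]   # 10 s of dummy 16-number poses

for seconds in (0.3, 1, 10):
    window = round(seconds * FPS)
    emb = delay_embed(pose, window)
    print(f"{seconds:>4} s window -> {len(emb[0])} dimensions per sample")
```

Under these assumptions a 0.3-second window yields 144 numbers per sample and a 10-second window yields 4,800, which is why patterns spanning long time scales are so much harder to find.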

Datta says he often discusses this matter with Gordon Berman, a physicist at Emory University who works on ways to characterize behaviors over longer time frames. Datta’s lab is exploring hierarchical classification systems that incorporate multiple time frames. This remains exploratory at present, and the computing power required is huge.

The context in which a behavior occurs can also influence how it’s interpreted. The same syllable used in different contexts, for example, will likely be associated with different patterns of neural activity in different brain regions. Different internal states — such as hunger or the desire to mate — will also give rise to distinct patterns of neural activity and will differentially affect behavior in different contexts.

Calhoun gives the simple example of an animal walking toward a meal or away from danger — the underlying activity might be similar in certain neurons (in the spinal cord, say) but fundamentally different in different brain regions. Calhoun’s research seeks to contextualize behavior by, for example, incorporating data about the sensory inputs that tracked animals receive. “If you don’t know the context, you don’t know what the neurons are doing or why their responses are the way they are,” he says.

For Carey, much of the insight needed for interpreting complex behavior data comes from grit and intuition rather than machine learning. Obtaining high-dimensional records of murine locomotion was the easier part, she says. “It took a lot of hard intellectual work to actually understand the data.”

She began by making hundreds of plots and simply looking at them. A breakthrough came after her student handed her a graph that the student found troubling: it showed that the tails of animals without functional cerebellums moved more, with curious speed-dependent phase delays. Taking the printed graph to a seminar, Carey sat staring at it, scribbling calculations. Then suddenly she got it: without the cerebellum, the tail was moving passively. “It’s prediction, stupid!” she thought to herself. The mutants, she’d realized, were unable to predict the consequences of moving one part of the body, and so didn’t compensate for these consequences by adjusting how they moved another body part.

She concedes that this core idea features in established models of cerebellar function. But nobody had expected it to be apparent in mouse locomotion. “It led us to something consistent with existing theories of cerebellar function in a completely surprising context,” Carey says, affording opportunities to explore theory in new ways.

Subsequent behavioral quantification, using a split-belt treadmill that moves a mouse’s left and right paws at different speeds, has shown that temporal and spatial coordination are achieved separately in the cerebellum. The next frontier is discovering the nature of the signals the cerebellum uses to execute these computations.

Branson’s goals are similar. She wants to find neural computations that flies use to trick us into thinking they’re smart. “Flies have very small brains,” she says. “It’s not like they have a very complex representation of the world. Instead, they have a set of rules that they’ve encoded to make it look like a complex representation.”

She calls these rules ‘hacks’. She hopes that using computerized techniques to see imperceptible aspects of behavior, such as how flies navigate or respond to external stimuli, will reveal the nature of these hacks by showing exactly what the flies’ brains are computing.

Asked what such hacks might look like, she thinks back to Michael Dickinson, the postdoctoral adviser who introduced her to biology, and how he uncovered one. “He’s a remarkable person,” Branson says. “He could watch flies walk around and just see things.”

Once, Dickinson became interested in why flies don’t bump into other flies. After closely observing the animals and how they moved relative to one another, he speculated that their pattern of movement allowed them to employ a simple strategy, a hack as it were, using the direction of optic flow to distinguish their own movements from those of other flies. Subsequent experiments showed he was right.

“That’s the kind of things we’d like our algorithms to be able to do,” Branson says. “We would love to make a machine learning algorithm that’s smarter than Michael Dickinson.”