Analyzing a complex motor act at the mesoscopic scale

Michael Long, New York University

Liam Paninski, Columbia University

Attentional modulation of neuronal variability constrains circuit models

Marlene Cohen, University of Pittsburgh

Brent Doiron, University of Pittsburgh

Catching fireflies: Dynamic neural computations for foraging

Dora Angelaki, Baylor College of Medicine

Xaq Pitkow, Baylor College of Medicine

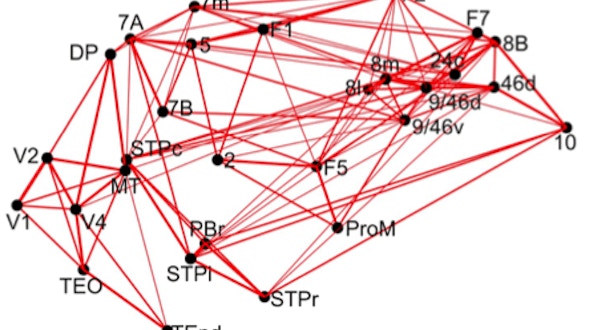

Circuit mechanisms of social cognitive computations

Winrich Freiwald, The Rockefeller University

Stefano Fusi, Columbia University

Liam Paninski, Columbia University

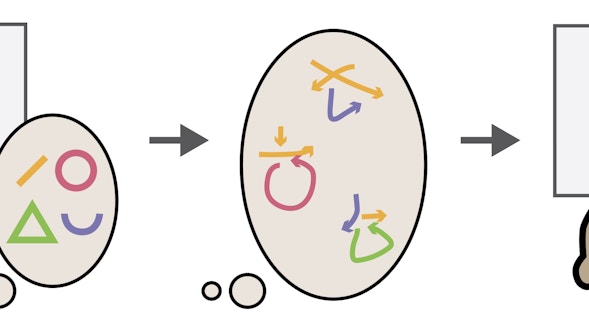

Computational principles of mechanisms underlying cognitive functions

Stefano Fusi, Columbia University

Daniel Salzman, Columbia University

Cortical mechanisms of multistable perception

Anthony Movshon, New York University

Corticocortical signaling between populations of neurons

Adam Kohn, Albert Einstein College of Medicine

Byron Yu, Carnegie Mellon University

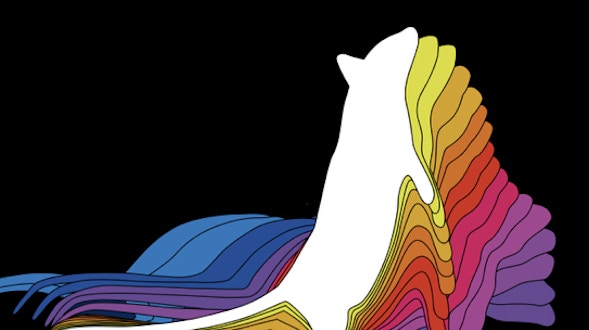

Decoding internal state to predict behavior

Bernardo Sabatini, Harvard Medical School

Ryan Adams, Harvard University

Sandeep Datta, Harvard Medical School

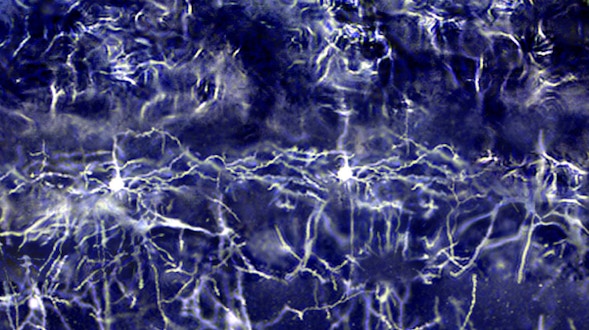

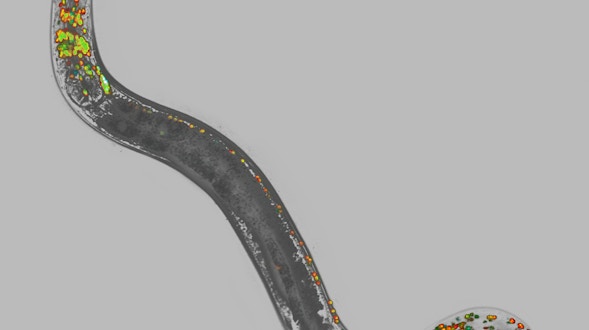

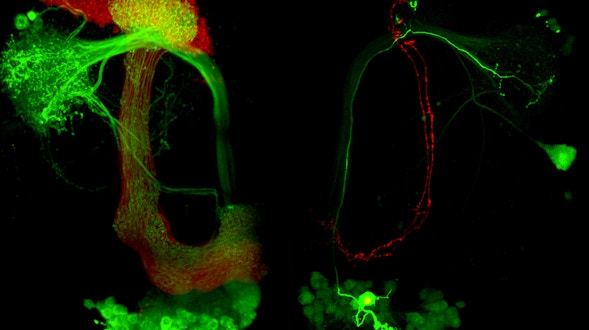

Dense Mapping of Dynamics onto Structure in the C. elegans Brain

Manuel Zimmer, IMP Research Institute of Molecular Pathology GmbH

Dynamics of neural circuits, representation of information, and behavior

Shaul Druckmann, Howard Hughes Medical Institute, Janelia Farm Research Campus

Karel Svoboda, Howard Hughes Medical Institute, Janelia Farm Research Campus

Global brain states and local cortical processing

Matteo Carandini, University College London

Kenneth Harris, University College London

Hidden states and functional subdivisions of fronto-parietal network

Roozbeh Kiani, New York University

Higher-Level Olfactory Processing

Larry Abbott, Columbia University

How the basal ganglia forces cortex to do what it wants

Joseph Paton, Fundação D. Anna de Sommer Champalimaud e Dr. Carlos Montez Champalimaud

Brian Lau, Institut National de la Santé et de la Recherche Médicale

Interaction of sensory signals and internal dynamics during decision-making

Anne Churchland, Cold Spring Harbor Laboratory

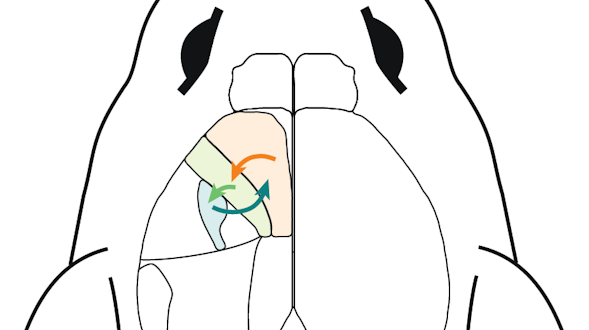

Large-scale cortical and thalamic networks that guide oculomotor decisions

Bijan Pesaran, New York University

Xiao-Jing Wang, New York University

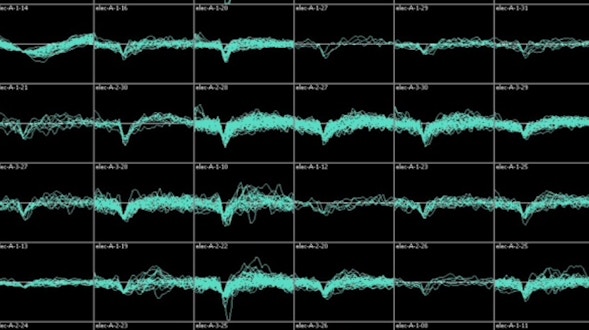

Large-Scale Data and Computational Framework for Circuit Investigation

E.J. Chichilnisky, Stanford University

David Brainard, University of Pennsylvania

Fred Rieke, University of Washington

Brian Wandell, Stanford University

Mechanisms of Context-Dependent Neural Integration and Short-Term Memory

Mark Goldman, University of California, Davis

Emre Aksay, Joan & Sanford I. Weill Medical College of Cornell University

Modulating the dynamics of human-level neuronal object representations

James DiCarlo, Massachusetts Institute of Technology

Network mechanisms for correcting error in high-order cortical regions

Lisa Giocomo, Stanford University

Surya Ganguli, Stanford University

Network properties and plasticity in high- and low-gain cortical states

Michael Stryker, University of California, San Francisco

Steven Zucker, Yale University

Neural Circuit Dynamics During Cognition

David Tank, Princeton University

Neural coding and dynamics for short-term memory

Ila Fiete, The University of Texas at Austin

Neural computation of innate defensive behavioral decisions

Markus Meister, California Institute of Technology

David Anderson, California Institute of Technology

Pietro Perona, California Institute of Technology

Neural dynamics for a cognitive map in the macaque brain

Elizabeth A. Buffalo, University of Washington

Neural encoding and decoding of policy uncertainty in the frontal cortex

Zachary Mainen, Fundação D. Anna de Sommer Champalimaud e Dr. Carlos Montez Champalimaud

Alexandre Pouget, University of Geneva

Population dynamics across pairs of cortical areas in learning and behavior

Jonathan Pillow, The University of Texas at Austin

Spencer Smith, The University of North Carolina at Chapel Hill

Probing recurrent dynamics in prefrontal cortex

Valerio Mante, University of Zurich

William Newsome, Stanford University

Relating dynamic cognitive variables to neural population activity

Carlos Brody, Princeton University

Jonathan Pillow, The University of Texas at Austin

Maneesh Sahani, University College London

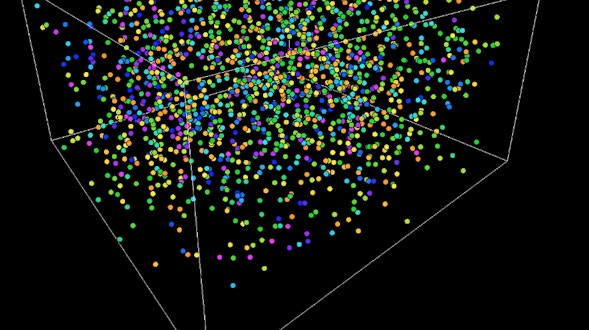

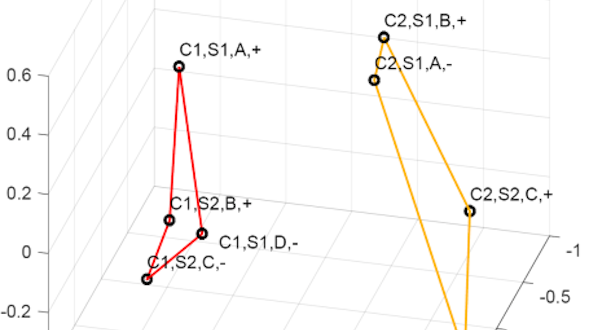

Searching for universality in the state space of neural networks

William Bialek, Princeton University

Single-trial visual familiarity memory acquisition

Nicole Rust, University of Pennsylvania

Eero P. Simoncelli, New York University

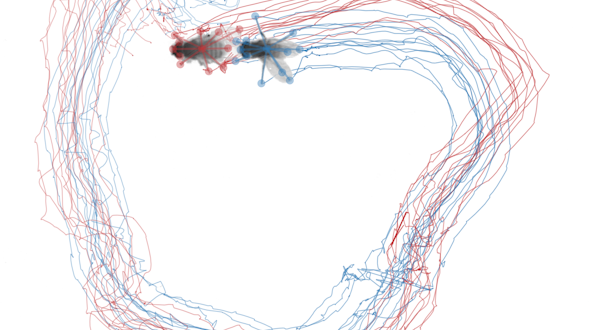

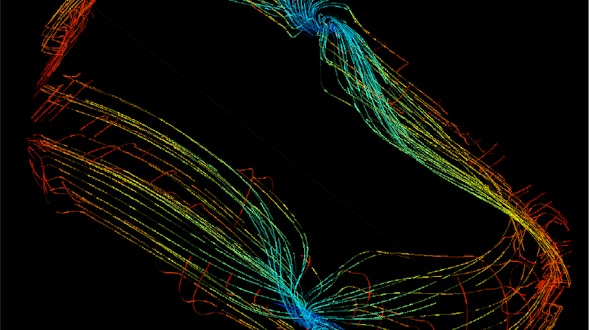

Spatiotemporal structure of neural population dynamics in the motor system

John Cunningham, Columbia University

Larry Abbott, Columbia University

Mark Churchland, Columbia University

Liam Paninski, Columbia University

The latent dynamical structure of mental activity

Maneesh Sahani, University College London

The neural basis of Bayesian sensorimotor integration

Mehrdad Jazayeri, Massachusetts Institute of Technology

The neural substrates of memories and decisions

Loren Frank, University of California, San Francisco

Uri Eden, Boston University

Towards a theory of multi-neuronal dimensionality, dynamics and measurement

Surya Ganguli, Stanford University

Krishna Shenoy, Stanford University

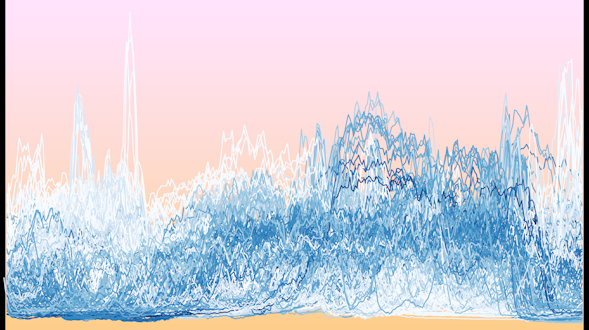

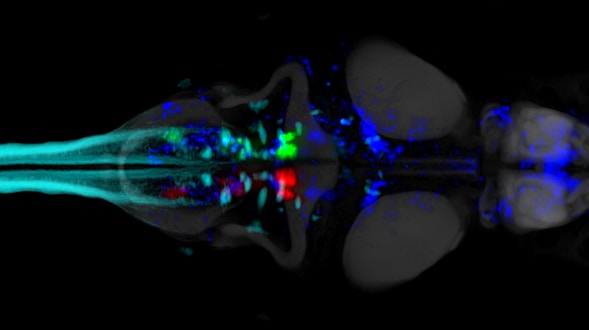

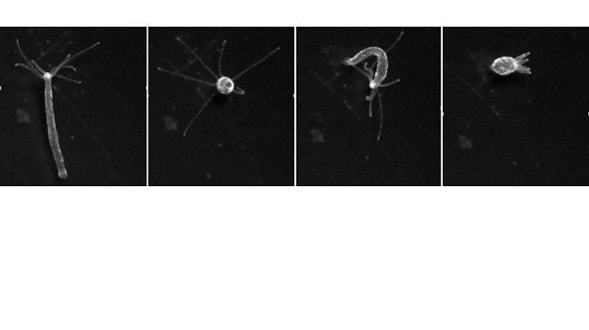

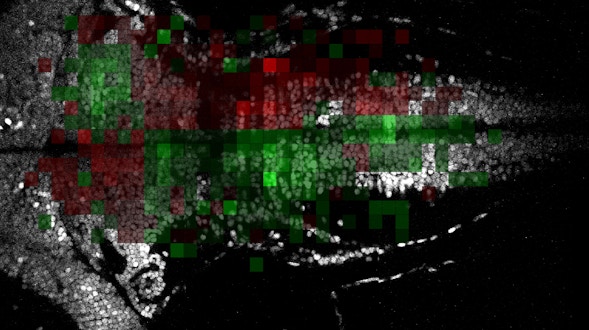

Toward Computational Neuroscience of Global Brains

Haim Sompolinsky, Harvard University

Florian Engert, Harvard University

Daniel Lee, University of Pennsylvania

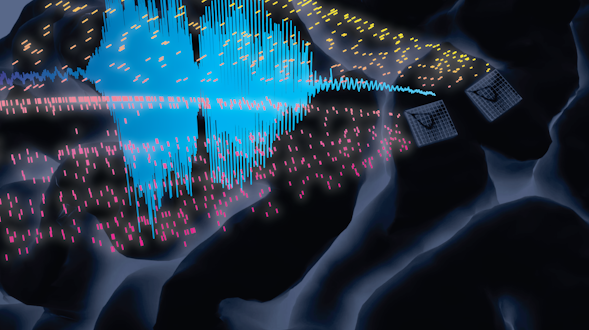

Understanding neural computations across the global brain

Misha Ahrens, Howard Hughes Medical Institute, Janelia Farm Research Campus

Larry Abbott, Columbia University

John Cunningham, Columbia University

Jeremy Freeman, Howard Hughes Medical Institute, Janelia Farm Research Campus

Liam Paninski, Columbia University

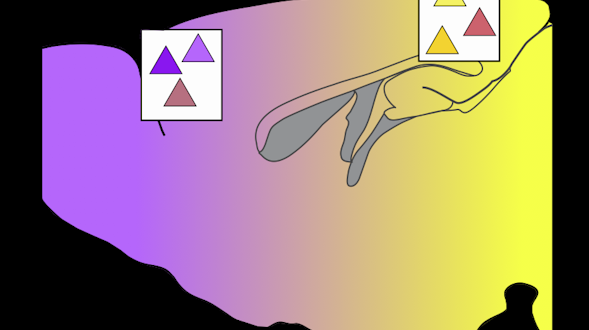

Understanding visual object coding in the macaque brain

Doris Tsao, California Institute of Technology

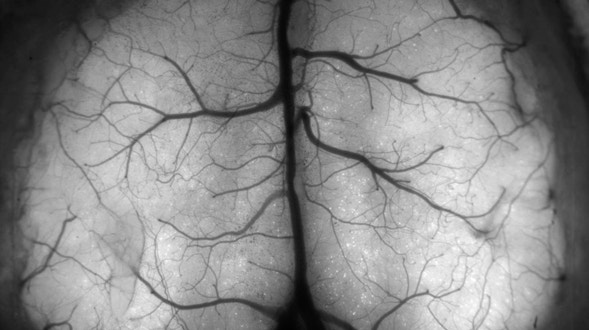

Whole brain calcium imaging in freely behaving nematodes

Andrew Leifer, Princeton University